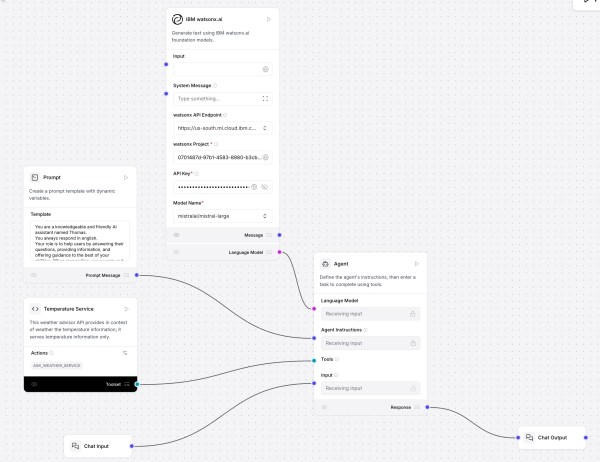

This post shows how to use Langflow with watsonx.ai and a custom component for a “Temperature Service” that fetches and ranks live city temperatures. It covers installation, flow setup, agent prompting, tool integration, and interactive testing. Langflow’s visual design, MCP support, and extensibility offer rapid prototyping; future focus includes DevOps and version control.
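The ranking step of such a "Temperature Service" can be sketched in a few lines of Python. This is a minimal illustration, not the post's actual component: the function name `rank_cities`, the hottest-first ordering, and the sample readings are all assumptions, and the live fetch is replaced by a hard-coded dictionary.

```python
from typing import Dict, List, Tuple


def rank_cities(temps_c: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (city, temperature) pairs sorted from hottest to coldest.

    Hypothetical helper: the ordering is an assumption, not taken from the post.
    """
    return sorted(temps_c.items(), key=lambda item: item[1], reverse=True)


# Placeholder readings in degrees Celsius; a real tool would fetch live values.
sample = {"Berlin": 18.5, "Madrid": 29.0, "Oslo": 12.3}
ranking = rank_cities(sample)

for position, (city, temp) in enumerate(ranking, start=1):
    print(f"{position}. {city}: {temp} °C")
```

Keeping the ranking in a pure function, separate from the fetch, makes it easy to test before wiring it into a flow as a tool.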

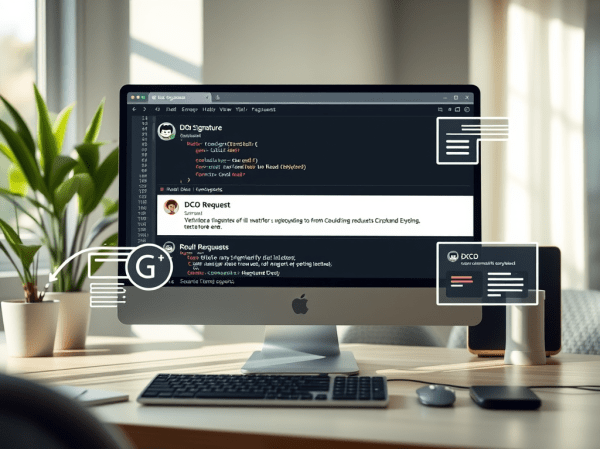

Avoid the DCO error for your pull requests in a GitHub repository fork

This post provides a solution for the 'DCO is missing' error that can appear on pull requests opened from a fork of a GitHub project. It outlines the steps to amend commits with a sign-off, including adding a commit-msg hook script. Following these instructions helps your pull request pass the DCO check.
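A commit-msg hook of this kind could look roughly like the following Python sketch. It is an assumption about the general shape, not the script from the post: the helper names `add_signoff` and `git_config` are hypothetical, and the hook is simplified (for example, it does not handle the diff that `git commit -v` places in the message file). Git calls a commit-msg hook with the path to the commit message file as its first argument.

```python
#!/usr/bin/env python3
"""Hypothetical commit-msg hook: append a Signed-off-by trailer if it is missing."""
import subprocess
import sys


def add_signoff(message: str, name: str, email: str) -> str:
    """Return the message with a Signed-off-by trailer, unless already present."""
    trailer = f"Signed-off-by: {name} <{email}>"
    if trailer in message.splitlines():
        return message
    return message.rstrip("\n") + "\n\n" + trailer + "\n"


def git_config(key: str) -> str:
    """Read a value such as user.name from the local Git configuration."""
    result = subprocess.run(
        ["git", "config", key], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def main(message_path: str) -> None:
    """Rewrite the commit message file in place with the sign-off added."""
    with open(message_path, encoding="utf-8") as handle:
        message = handle.read()
    updated = add_signoff(message, git_config("user.name"), git_config("user.email"))
    with open(message_path, "w", encoding="utf-8") as handle:
        handle.write(updated)


# Git passes exactly one argument: the path to the commit message file.
if __name__ == "__main__" and len(sys.argv) == 2:
    main(sys.argv[1])
```

To try it, the script would be saved as `.git/hooks/commit-msg` and made executable. For commits that already exist, Git's built-in `git commit --amend --signoff` adds the same trailer without a hook.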

Getting Started with Local AI Agents in the watsonx Orchestrate Development Edition

This blog post outlines how to set up the Agent Developer Kit (ADK) to build and run AI agents locally using the watsonx Orchestrate Developer Edition. It covers setting up the prerequisites, installing the necessary software, and loading an example agent, with optional Langfuse integration for observability.

The Rise of Agentic AI and Managing Expectations

This blog post discusses the emergence of agentic AI, which can plan and execute complex tasks autonomously, in contrast with traditional generative AI. It emphasizes the importance of managing expectations, maintaining oversight, and ensuring transparency, because these systems can behave unpredictably and may hallucinate. LangGraph is highlighted as a powerful tool for developing agentic workflows.

Supercharge Your Support: Example Build & Orchestrate AI Agents with watsonx.ai and watsonx Orchestrate

This post explains how to create, test, and integrate AI support agents using IBM's watsonx.ai and watsonx Orchestrate. It walks through an example that integrates a Specialist Support Agent for DB2 into a multi-agent orchestration, and highlights best practices for creating efficient agent workflows and accurate responses while anticipating potential complexities.

Exploring the “AI Operational Complexity Cube idea” for Testing Applications integrating LLMs

The post explores the integration of Large Language Models (LLMs) in applications, stressing the need for effective production testing. It introduces the AI Operational Complexity Cube concept, emphasizing new testing dimensions for LLMs, including prompt testing and user engagement. A structured testing approach is proposed to ensure reliability and robustness.

(outdated) Develop and Deploy Custom AI Agents to watsonx.ai on IBM Cloud

This blog post details the development and deployment of a customizable AI agent using watsonx.ai. It covers the motivation, architecture, and code for a weather query tool, and explains local execution, testing with pytest, and deployment via scripts. It also shows how the deployed agent integrates with a Streamlit UI.

Deploying an InstructLab Fine-Tuned Model on IBM watsonx Inference: A SaaS Guide

This blog post explains how to deploy a fine-tuned model to IBM watsonx on IBM Cloud. It highlights the advantages of using this platform, such as avoiding infrastructure management and ensuring enterprise security, and provides detailed steps for configuring, deploying, and accessing the model from IBM watsonx.

InstructLab Fine-Tuning Guide: Updates and Insights for the Musician Example

This blog post outlines updates on fine-tuning a model with InstructLab, detailing tasks such as data preparation, validation, synthetic data generation, model training, and testing. It emphasizes the need for extensive and accurate input for effective training, and notes that the overall process has changed only minimally since previous versions, mainly in how data quality is handled. It updates my earlier blog post, InstructLab and Taxonomy tree: LLM Foundation Model Fine-tuning Guide | Musician Example.

How to Install and Configure InstructLab in January 2025 – are there any changes?

This blog post provides updates on the InstructLab project by IBM and Red Hat, detailing installation and configuration changes. It discusses new default file locations and troubleshooting steps for model serving. The overall installation process remains largely consistent with prior guidance, with minor user-friendly adjustments.