The blog post explains how to integrate a custom TypeScript tool, TravelAgentTool, into the Bee API and Bee UI to extend the functionality of the Bee Framework. It details the setup steps, including modifying source files and configuring environment variables, and demonstrates the tool's use for travel inquiries. Implementation code is provided throughout.

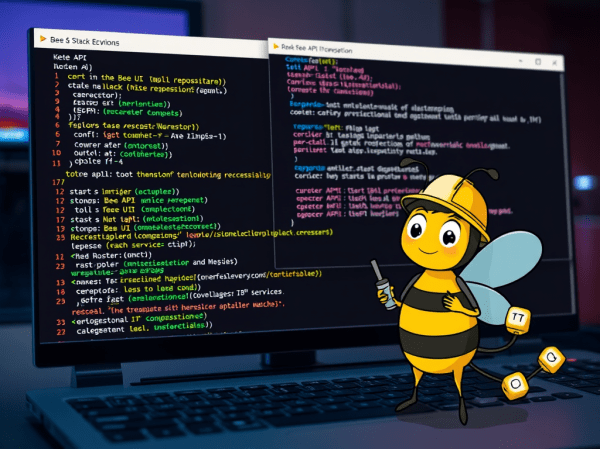

CheatSheet “Ready to Go” for Bee API and UI development

The post outlines how to set up a development environment for contributing to the Bee API and Bee UI repositories within the broader Bee Stack. It covers cloning the repositories, starting the infrastructure, configuring the .env files, and launching both the Bee API and Bee UI servers, so that the environment is ready for development.

Create a Full-Screen Web-Chat with watsonx Assistant, IBM Cloud Code Engine and watsonx.ai

The blog post shows how to integrate watsonx Assistant and watsonx.ai to create a full-screen user interface for interacting with a large language model (LLM) with minimal coding. It outlines the motivation, the architecture, the setup process, and the specific actions needed to deploy the integration on IBM Cloud Code Engine.

Bee Agent example for a simple travel assistant using a custom tool and observe the agent behavior in detail (Bee Framework 0.0.34 and watsonx.ai)

This blog post explains how to implement a custom travel assistant agent with the Bee Agent Framework. It covers creating a tool that suggests vacation locations, using weather data, and integrating with MLflow for observability. The article focuses on practical execution steps, system requirements, and the motivation for combining location and weather information to answer user queries.

An Example of how to use the “Bee Agent Framework” (v0.0.33) with watsonx.ai

This blog post explores the integration of the Bee Agent Framework with watsonx.ai, detailing the setup of a weather agent example on macOS. It discusses the required installations, the environment variable configuration, and the code updates needed because of framework changes. The execution output shows how the agent retrieves current weather data for Las Vegas.

IBM Granite for Code models are available on Hugging Face and ready to be used locally with “watsonx Code Assistant”

The IBM Granite for Code models on Hugging Face can be run locally and used in VS Code with watsonx Code Assistant. They support 116 programming languages and are available under the Apache 2.0 license.

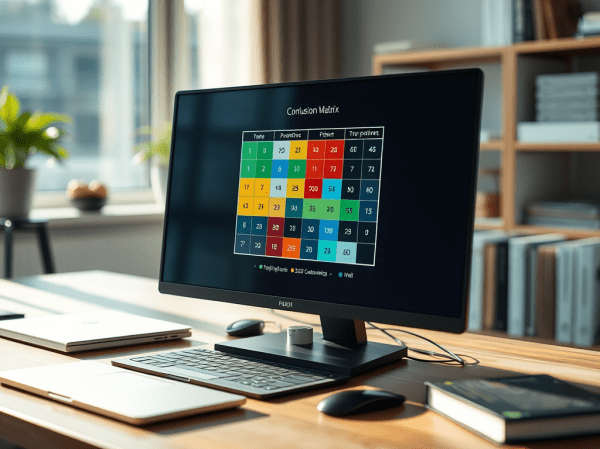

Land of Confusion using Classifications, and Metrics for a nonspecific Ground Truth

This blog post examines the confusion matrix as a tool for evaluating the performance of large language models (LLMs) on classification tasks, especially in legal document analysis. It discusses how to calculate the key classification metrics derived from it (Accuracy, Precision, Recall, and F1 score) and highlights the challenges of working with a broadly defined Ground Truth.
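As a generic illustration, and not the post's own code, the four metrics can be derived from the counts of a binary confusion matrix as shown below. The function name `classification_metrics` and the counts in the usage line are hypothetical, chosen only to show the arithmetic.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Compute Accuracy, Precision, Recall, and F1 from binary confusion-matrix counts.

    tp/fp/fn/tn = true positives, false positives, false negatives, true negatives.
    Each ratio falls back to 0.0 when its denominator is zero.
    """
    total = tp + fp + fn + tn
    # Accuracy: share of all predictions that were correct
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that are actually positive
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of actual positives that the model found
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only
metrics = classification_metrics(tp=8, fp=2, fn=4, tn=6)
print(metrics)
```

With a broadly defined Ground Truth, the hard part is deciding which predictions count as `tp` or `fp` in the first place. The formulas themselves stay the same.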

Integrating langchain_ibm with watsonx and LangChain for function calls: Example and Tutorial

The blog post demonstrates how to use the ChatWatsonx class from langchain_ibm for "function calls" with LangChain and IBM watsonx™ AI. It provides an example chat function call that retrieves weather information for various cities, together with instructions for setting up and running the example, plus additional resources and examples.

InstructLab and Taxonomy tree: LLM Foundation Model Fine-tuning Guide | Musician Example

The blog post introduces InstructLab, a project by IBM and Red Hat, and uses a personal musician example to walk through fine-tuning the model "models/merlinite-7b-lab-Q4_K_M.gguf". The process covers data preparation, model training, testing, and conversion, and ends with serving the fine-tuned model to verify its accuracy.

Fine-tune LLM foundation models with the InstructLab an Open-Source project introduced by IBM and Red Hat

This blog post provides a step-by-step guide to setting up the InstructLab CLI on an Apple laptop with an M3 chip, including an overview of InstructLab and its benefits. It also lists the supported models. Additionally, it refers to a Red Hat YouTube demonstration and highlights the project's potential impact.