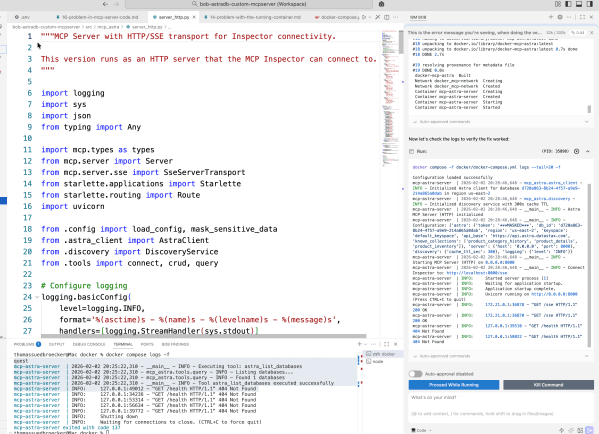

This blog post details the author's exploration of IBM Bob while building an MCP server for Astra DB. It emphasizes learning through experimentation in Code Mode, focusing on automation and iterative development. The author shares insights on prompt creation, workflow challenges, and the importance of documentation throughout the process, ultimately achieving a functional server setup.

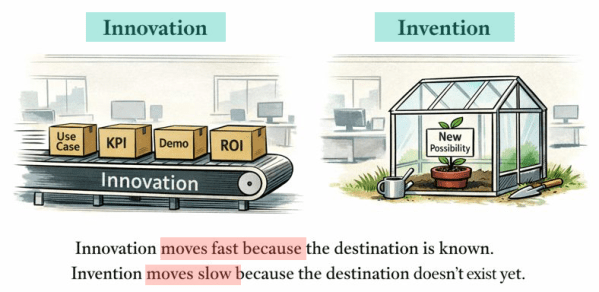

Innovation Is Eating Invention — and GenAI Is Accelerating It

The post discusses how the current focus on fast, outcome-driven innovation in the GenAI landscape risks sidelining invention, which nurtures genuine new possibilities. It emphasizes that while innovation thrives in KPI-oriented settings, invention often struggles for justification. The author calls for a deliberate balance to preserve the space for invention in future developments.

REST API Usage with the watsonx Orchestrate Developer Edition locally: An Example

This post outlines the process of setting up a local watsonx Orchestrate server and invoking a simple agent via REST API using Python. It covers environment setup, Bearer token retrieval, agent ID listing, and code execution.

Build, Export & Import a watsonx Orchestrate Agent with the Agent Development Kit (ADK)

This post guides users through building an AI agent locally using the watsonx Orchestrate Agent Development Kit (ADK), exporting it from their local setup, and importing it into a remote instance on IBM Cloud. The process enhances local development while ensuring efficient production deployment.

Reflecting on 2024: My Journey Through Innovation, AI, and Development

In 2024, I published 35 blog posts focusing on innovation in AI and development, emphasizing the Bee Agent Framework and IBM's watsonx.ai. The highlights include creating cheat sheets, exploring large language models, and providing practical guides. That boosts my excitement for future challenges in 2025.

Fine-tune LLM foundation models with InstructLab, an Open-Source project introduced by IBM and Red Hat

This blog post provides a step-by-step guide to setting up the InstructLab CLI on an Apple laptop with an Apple M3 chip, including an overview of InstructLab and its benefits. It also covers supported models and detailed setup instructions. Additionally, it refers to a Red Hat YouTube demonstration and highlights the project's potential impact.

AI Prompt Engineering: Streamlining Automation for Large Language Models

This blog post focuses on the importance of Prompt Engineering for AI models, particularly Large Language Models (LLMs), in reducing manual effort and automating validation processes. It emphasizes the need for automation to handle growing test data and variable combinations, and discusses using the watsonx.ai Prompt Lab for manual work and initial automation. The post also highlights the significance of integrating automation with version control for consistency and reproducibility.

Getting started with Text Generation Inference (TGI) using a container to serve your LLM model

This blog post outlines a bash automation for setting up and testing Text Generation Inference (TGI) using a container. It provides instructions for creating a Python test client, starting the TGI server, and troubleshooting common issues. The post emphasizes the benefits of using containers and references Hugging Face and NVIDIA technologies.

How to save Elasticsearch query results using paging in a bash script automation

This blog post is a short cheat sheet on how to save Elasticsearch query results with paging in a bash script automation. The automation downloads all the query results into JSON files for as long as the paging returns results in the hits of the returned JSON. The files are numbered 01.json to XX.json.
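
The paging loop described above can be sketched roughly as follows. This is a minimal Python illustration and not the bash script from the post. The `save_paged_results` helper, its `fetch_page` callback, and the `fake_fetch` stand-in are hypothetical names introduced here. The sketch assumes each response carries its documents under `hits.hits`, as Elasticsearch search responses do, and that paging is driven by an offset and a page size.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def save_paged_results(
    fetch_page: Callable[[int, int], dict],  # hypothetical: (offset, size) -> response
    out_dir: Path,
    page_size: int = 100,
) -> int:
    """Write each non-empty page to NN.json and return the number of files written."""
    out_dir.mkdir(parents=True, exist_ok=True)
    offset, page_no = 0, 0
    while True:
        response = fetch_page(offset, page_size)
        hits = response.get("hits", {}).get("hits", [])
        if not hits:
            # Stop as soon as a page comes back without hits.
            break
        page_no += 1
        # Zero-padded file names: 01.json, 02.json, ...
        (out_dir / f"{page_no:02d}.json").write_text(json.dumps(response, indent=2))
        offset += len(hits)
    return page_no


def fake_fetch(offset: int, size: int) -> dict:
    """Stand-in for a real search call (assumption): serves 250 fake documents."""
    docs = [{"_id": str(i)} for i in range(250)]
    return {"hits": {"hits": docs[offset:offset + size]}}


demo_dir = Path(tempfile.mkdtemp())
written = save_paged_results(fake_fetch, demo_dir, page_size=100)
files = sorted(p.name for p in demo_dir.glob("*.json"))
```

In a real setup, `fetch_page` would wrap the actual search request. Keeping it as a callback lets the loop be tried without a running cluster.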

Observe a running pod on IBM Cloud Code Engine with kubectl commands

In IBM Cloud Code Engine, you can also use kubectl commands, in addition to the IBM Cloud Code Engine CLI, to get information about your running application.