This blog post details the author's exploration of IBM Bob while building an MCP server for Astra DB. It emphasizes learning through experimentation in Code Mode, focusing on automation and iterative development. The author shares insights on prompt creation, workflow challenges, and the importance of documentation throughout the process, ultimately achieving a functional server setup.

A Bash Cheat Sheet: Adding a Local Ollama Model to watsonx Orchestrate

The post discusses automating local testing of IBM watsonx Orchestrate with Ollama models using a Bash script. The script simplifies setup and makes sure the connections and configuration are correct: it starts the services and confirms that the model is accessible, which reduces typical setup errors.
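
The script itself is Bash and is not reproduced here. To illustrate the "confirm model accessibility" step, here is a minimal Python sketch that asks a local Ollama server which models it has pulled. It assumes Ollama's default address (`http://localhost:11434`) and its `/api/tags` endpoint, which returns a JSON object with a `models` list. The helper names (`fetch_tags`, `model_names`, `has_model`) are hypothetical and not taken from the post.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def fetch_tags(url: str = OLLAMA_TAGS_URL, timeout: float = 5.0) -> dict:
    """Return the decoded JSON body of Ollama's /api/tags endpoint."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def model_names(tags_payload: dict) -> list[str]:
    """Extract the model names (e.g. "name:tag") from an /api/tags payload."""
    return [m.get("name", "") for m in tags_payload.get("models", [])]


def has_model(tags_payload: dict, wanted: str) -> bool:
    """True if `wanted` is available locally.

    With an explicit tag ("name:tag") the match must be exact; without a tag,
    any locally pulled tag of that model name counts as a match.
    """
    names = model_names(tags_payload)
    if ":" in wanted:
        return wanted in names
    return any(name.split(":", 1)[0] == wanted for name in names)
```

For example, `has_model(fetch_tags(), "granite4")` should return `True` once a Granite 4 model has been pulled. If `fetch_tags()` raises `urllib.error.URLError`, the Ollama service is most likely not running yet. Keeping the parsing separate from the HTTP call makes the check easy to test without a live server.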

A Bash Cheat Sheet: Adding a Model to Local watsonx Orchestrate

This post describes a Bash automation script for setting up the IBM watsonx Orchestrate Development Edition. The script automates tasks such as resetting the environment, starting the server, and configuring credentials, which makes the workflow more efficient. It addresses common setup issues and makes the process repeatable and reliable.

RAG is Dead … Long Live RAG

The post explains why traditional Retrieval-Augmented Generation (RAG) approaches no longer scale and how modern architectures, including GraphRAG, address these limitations. It highlights why data quality, metadata, and disciplined system design matter more than models or frameworks, and provides a practical foundation for building robust RAG systems, illustrated with IBM technologies but applicable far beyond them.

Update Ollama to use Granite 4 in VS Code with watsonx Code Assistant

This post covers how to set up Granite 4 models in Ollama for use with watsonx Code Assistant in VS Code. The process includes inspecting the available models, uninstalling old versions, installing the new models, and configuring them for use. The experience emphasizes exploration and learning in a private, efficient AI development environment.

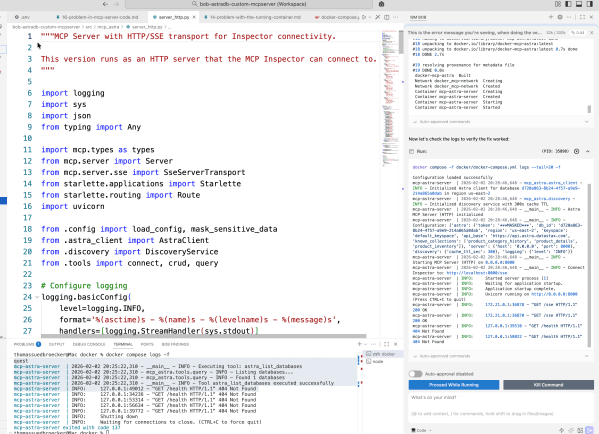

Access watsonx Orchestrate functionality over an MCP server

The Model Context Protocol (MCP) is increasingly used in AI applications, including with the watsonx Orchestrate ADK. The setup described in the post integrates the MCP server with the Development Edition, so users can develop and manage agents and tools directly from their coding environment.

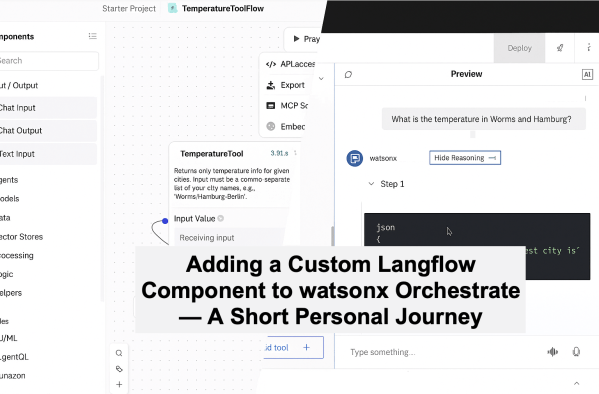

Adding a Custom Langflow Component to watsonx Orchestrate — A Short Personal Journey

This blog post outlines a practical example of setting up a custom component in Langflow to connect with an external weather API and import it into the watsonx Orchestrate Development Edition. The process emphasizes learning through experimentation rather than achieving a flawless solution, highlighting the potential of Langflow and watsonx Orchestrate for AI development.

It’s All About Risk-Taking: Why “Trustworthy” Beats “Deterministic” in the Era of Agentic AI

This post explores how Generative AI and Agentic AI emphasize trustworthiness over absolute determinism. As AI's role in enterprises evolves, organizations must focus on building reliable systems that operate under risk, balancing innovation with accountability. A personal perspective.

How to Build a Knowledge Graph RAG Agent Locally with Neo4j, LangGraph, and watsonx.ai

The post discusses integrating Knowledge Graphs with Retrieval-Augmented Generation (RAG), specifically using Neo4j and LangGraph. It outlines an example setup where extracted document data forms a structured graph for querying. The system enables natural question-and-answer interactions through AI, enhancing information retrieval with graph relationships and embeddings.
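
The core idea of graph-based retrieval, starting from the entities in a question and collecting the facts connected to them, can be sketched without Neo4j. The following minimal Python example is an in-memory stand-in, not the post's Neo4j/LangGraph implementation: it stores hypothetical `(subject, relation, object)` triples, expands breadth-first from seed entities up to a chosen number of hops, and formats the collected facts as plain-text context for an LLM prompt. Embeddings and Cypher queries are deliberately left out.

```python
from collections import defaultdict, deque

Triple = tuple[str, str, str]  # (subject, relation, object)


def build_graph(triples: list[Triple]) -> dict[str, list[Triple]]:
    """Index every triple under both of its entities so edges can be walked either way."""
    edges: dict[str, list[Triple]] = defaultdict(list)
    for s, r, o in triples:
        edges[s].append((s, r, o))
        edges[o].append((s, r, o))
    return edges


def retrieve(edges: dict[str, list[Triple]], seeds: list[str], hops: int = 1) -> list[Triple]:
    """Breadth-first expansion from the seed entities; returns facts in discovery order."""
    seen_nodes = set(seeds)
    seen_facts: set[Triple] = set()
    facts: list[Triple] = []
    frontier = deque((entity, 0) for entity in seeds)
    while frontier:
        node, depth = frontier.popleft()
        if depth >= hops:
            continue  # do not expand beyond the hop limit
        for fact in edges.get(node, []):
            if fact not in seen_facts:
                seen_facts.add(fact)
                facts.append(fact)
            s, _, o = fact
            for neighbor in (s, o):
                if neighbor not in seen_nodes:
                    seen_nodes.add(neighbor)
                    frontier.append((neighbor, depth + 1))
    return facts


def to_context(facts: list[Triple]) -> str:
    """Render facts as lines that can be pasted into an LLM prompt."""
    return "\n".join(f"{s} -[{r}]-> {o}" for s, r, o in facts)
```

In a real system the seed entities would come from entity extraction on the user's question, and the graph database would do the traversal. Raising `hops` trades more context for more noise in the prompt.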

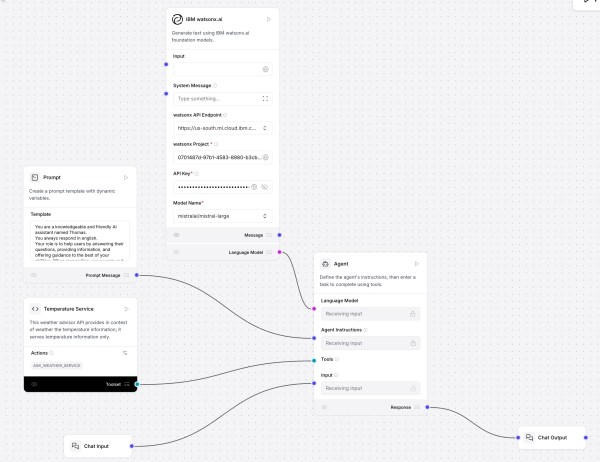

Create Your First AI Agent with Langflow and watsonx

This post shows how to use Langflow with watsonx.ai and a custom component for a “Temperature Service” that fetches and ranks live city temperatures. It covers installation, flow setup, agent prompting, tool integration, and interactive testing. Langflow’s visual design, MCP support, and extensibility offer rapid prototyping; future focus includes DevOps and version control.
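
The ranking half of such a "Temperature Service" can be sketched in a few lines. The example below assumes the temperatures have already been fetched from a weather API (not shown) and uses a hypothetical `CityTemperature` record. It is an illustration only, not the custom component from the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CityTemperature:
    city: str
    celsius: float


def rank_by_temperature(readings: list[CityTemperature], warmest_first: bool = True) -> list[CityTemperature]:
    """Sort readings by temperature; ties are broken alphabetically by city name."""
    return sorted(
        readings,
        key=lambda r: (-r.celsius if warmest_first else r.celsius, r.city),
    )


def format_ranking(readings: list[CityTemperature]) -> str:
    """Produce a numbered, human-readable list that an agent can return as tool output."""
    return "\n".join(
        f"{i}. {r.city}: {r.celsius:.1f} °C" for i, r in enumerate(readings, start=1)
    )
```

The tuple sort key gives a deterministic order even when two cities report the same temperature, so the tool returns stable output for the agent to reason over.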