The IBM Granite for Code models on Hugging Face integrate with VS Code, support 116 programming languages, and are available under an Apache 2.0 license.

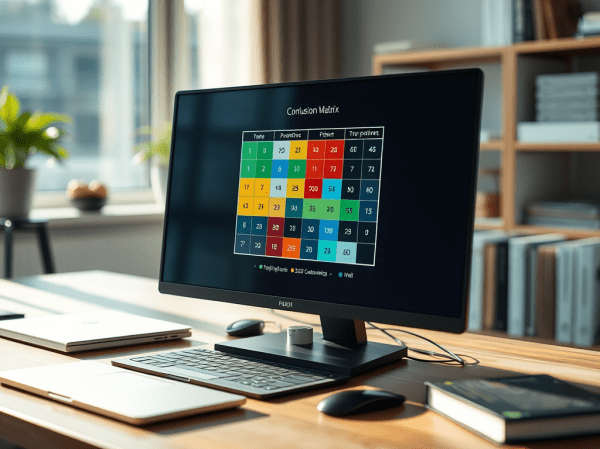

Land of Confusion using Classifications, and Metrics for a nonspecific Ground Truth

This blog post examines the Confusion Matrix as a metric for evaluating the performance of large language models (LLMs) in classification tasks, especially legal document analysis. It discusses the calculation of key classification metrics like Accuracy, Precision, Recall, and F1 score, emphasizing the challenges of using a broadly defined Ground Truth.
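As a quick illustration of the metrics named above, here is a minimal sketch for the binary case. The function name `classification_metrics` and the counts in the example are hypothetical and are not taken from the post; they only show how the four confusion-matrix cells feed into Accuracy, Precision, Recall, and F1.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Derive common metrics from the four cells of a binary confusion matrix."""
    total = tp + fp + fn + tn
    # Accuracy: share of all predictions that were correct.
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of predicted positives that were truly positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of actual positives that were found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=40, fp=10, fn=5, tn=45)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

When the Ground Truth is only broadly defined, the counts themselves become uncertain, so these numbers are best read as rough indicators rather than exact scores.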

InstructLab and Taxonomy tree: LLM Foundation Model Fine-tuning Guide | Musician Example

This post introduces InstructLab, a project by IBM and Red Hat, and walks through fine-tuning the model "MODELS/MERLINITE-7B-LAB-Q4_K_M.GGUF" using a personal musician example. The process covers data preparation, model training, testing, and conversion, and ends with serving the model to verify its accuracy.

Using CUDA and Llama-cpp to Run a Phi-3-Small-128K-Instruct Model on IBM Cloud VSI with GPUs

llama.cpp and the optimized GGUF model format are growing in popularity. This post outlines the steps to run "Phi-3-Small-128K-Instruct" in GGUF format with llama.cpp on an IBM Cloud VSI with GPUs and Ubuntu 22.04. It covers VSI setup, the CUDA toolkit, compilation, the Python environment, model usage, and additional resources.

CheatSheet: How to ensure you use the right Python environment in VS Code interpreter settings?

This post shows how to make sure VS Code uses a Python virtual environment created with venv. It covers creating and activating the venv, then opening the VS Code command palette, selecting an interpreter, and navigating to the pyvenv.cfg file.
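One way to double-check the result from inside Python is to compare `sys.prefix` with `sys.base_prefix`, which differ when a venv is active. The sketch below is not taken from the post; the helper names `in_virtualenv` and `describe_environment` are hypothetical.

```python
import sys
from pathlib import Path


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # In a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix points at the base Python installation.
    return sys.prefix != sys.base_prefix


def describe_environment() -> dict:
    """Collect a few facts that help confirm which interpreter is in use."""
    # venv places a pyvenv.cfg file at the root of the environment.
    cfg = Path(sys.prefix) / "pyvenv.cfg"
    return {
        "interpreter": sys.executable,
        "in_venv": in_virtualenv(),
        "pyvenv_cfg_exists": cfg.is_file(),
    }


info = describe_environment()
for key, value in info.items():
    print(f"{key}: {value}")
```

Running this from the VS Code integrated terminal or through the Run button should report the interpreter path inside the venv folder if the interpreter selection took effect.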