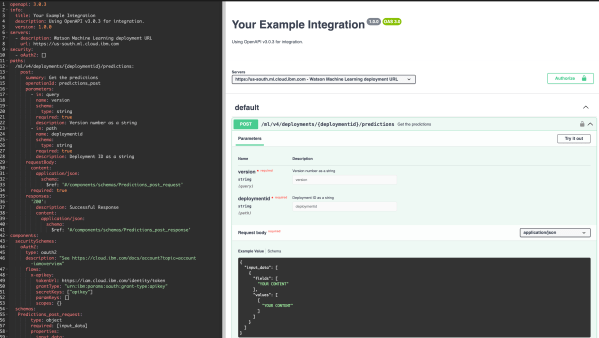

This blog post is about how to define a custom OpenAPI specification for a Watson Machine Learning deployment on IBM Cloud in order to integrate it into watsonx Assistant. Watson Machine Learning deployments make it easy for data scientists to turn AI prototypes into something applications can integrate: they can work with the Jupyter Notebooks and Python they are used to, without knowing how to write containers or set up runtimes. Once they deploy, developers can consume the AI functionality they have implemented via a REST API.
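To make the "consume via a REST API" part concrete, here is a minimal sketch of how a developer might build a scoring request for such a deployment, using only the Python standard library. The endpoint path, the `version` date, and the `input_data` payload shape are assumptions based on typical Watson Machine Learning v4 usage, and `build_scoring_request` with all its argument values is a hypothetical helper, so check the details shown for your own deployment before relying on it.

```python
import json
import urllib.request


def build_scoring_request(base_url, deployment_id, token, fields, values,
                          version="2021-05-01"):
    """Build (but do not send) a POST request for a deployment's scoring endpoint.

    Assumption: the deployment exposes
    {base_url}/ml/v4/deployments/{deployment_id}/predictions
    and accepts an "input_data" list of {"fields", "values"} objects.
    """
    url = f"{base_url}/ml/v4/deployments/{deployment_id}/predictions?version={version}"
    payload = {"input_data": [{"fields": fields, "values": values}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # IAM bearer token, obtained separately
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # All values below are placeholders, not real endpoints or credentials.
    request = build_scoring_request(
        base_url="https://example.invalid",
        deployment_id="my-deployment-id",
        token="my-iam-token",
        fields=["question"],
        values=[["What is watsonx Assistant?"]],
    )
    print(request.full_url)
    print(request.data.decode("utf-8"))
```

Passing the returned object to `urllib.request.urlopen` would send the request. An OpenAPI specification for the integration would describe this same endpoint, its headers, and its request body.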

How to create a FastAPI server to use OpenAI models

My last blog post was "IBM Watsonx.ai and a simple question-answering pipeline using Python and FastAPI". Following an exchange with my family about an OpenAI sample for a FastAPI application, I created a small FastAPI server to access OpenAI models with Python.