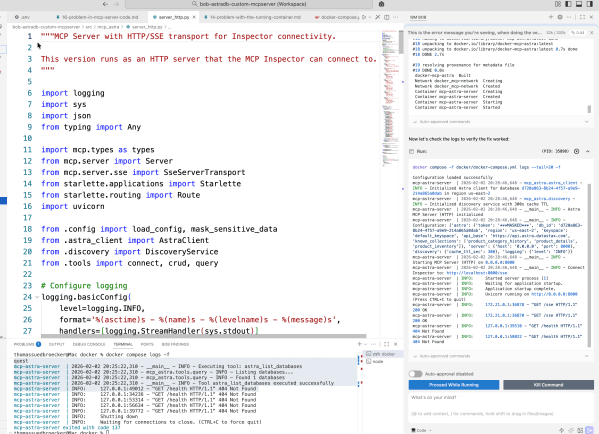

This blog post details the author's exploration of IBM Bob while building an MCP server for Astra DB. It emphasizes learning through experimentation in Code Mode, with a focus on automation and iterative development. The author shares insights on prompt creation, workflow challenges, and the importance of documentation throughout the process, and ends with a working server.

REST API Usage with the watsonx Orchestrate Developer Edition locally: An Example

This post outlines how to set up a local watsonx Orchestrate server and invoke a simple agent through the REST API using Python. It covers environment setup, retrieving a Bearer token, listing agent IDs, and running the code.

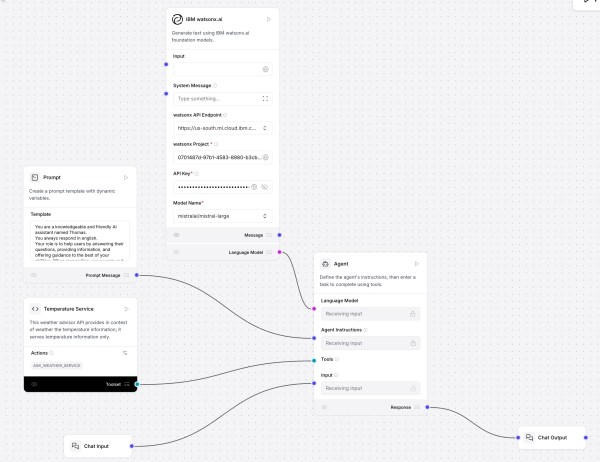

Create Your First AI Agent with Langflow and watsonx

This post shows how to use Langflow with watsonx.ai and a custom component for a “Temperature Service” that fetches and ranks live city temperatures. It covers installation, flow setup, agent prompting, tool integration, and interactive testing. Langflow’s visual design, MCP support, and extensibility enable rapid prototyping; DevOps and version control are topics for future work.

(outdated) Develop and Deploy Custom AI Agents to watsonx.ai on IBM Cloud

This blog post details the development and deployment of a customizable AI Agent using watsonx.ai. It covers the motivation, architecture, and code for a weather query tool, and explains how to run it locally, test it with pytest, and deploy it with scripts. It also emphasizes the integration with a Streamlit UI.

Simplified Example to build a Web Chat App with watsonx and Streamlit

This blog post describes a web chat application that uses a large language model on watsonx, with the interface built in Streamlit.io. It covers the motivation, architecture, key code sections, and local setup; the app includes basic authentication and options for user interaction. The author highlights Streamlit’s rapid prototyping capabilities and how easy it is to use with Python.

Create a Custom Bee Agent with a Custom Python Weather Tool: A Step-by-Step Guide

This blog post explains how to integrate a custom Python tool into the Bee Agent using the Bee UI, focusing on real-time weather data retrieval. It outlines the setup process, agent customization, and testing to confirm that the tool works. It also shows how clear tool descriptions improve the agent's interactions with the tool and make it easier to reuse in future applications.

Enhance the LangChain AI Agent Weather Query Example with a Dependency Graph Visualization

This blog post shows a simple way to add a dependency graph visualization to the runnable chain of the LangChain AI Agent weather query example that uses WatsonxLLM.

Implementing LangChain AI Agent with WatsonxLLM for a Weather Queries application

This blog post describes how to customize the LangChain AI Agent example from IBM Developer using watsonx in Python. It walks through implementing a weather query application step by step, covering model parameters, prompt creation, agent chains, tool definitions, and execution. It also links to additional resources for further exploration.

Does it work to use ChatWatsonx from langchain_ibm to implement an agent that invokes functions?

The blog post explores integrating ChatWatsonx with LangChain for function calls, using a weather example, with the goal of better understanding how AI agents use tools and actions. The process includes defining the tool functions, creating a ChatWatsonx instance, and implementing a structured ChatPromptTemplate. The experiment was not fully successful, but it highlights the importance of the prompt.

Integrating langchain_ibm with watsonx and LangChain for function calls: Example and Tutorial

The blog post demonstrates using the ChatWatsonx class of langchain_ibm for "function calls" with LangChain and IBM watsonx™ AI. It provides an example of a chat function call that retrieves weather information for several cities, includes instructions to set up and run the example, and links to additional resources and examples.