This blog post discusses creating a custom Bee Agent that operates independently of the Bee Stack and interacts in German. It covers the requirements, agent examples, coding in TypeScript, and GitHub references. The author implements the agent with a specific system prompt and addresses the challenge of getting consistent output in German.

Simplified Example to build a Web Chat App with watsonx and Streamlit

This blog post describes a web chat application that uses a large language model on watsonx, with the interface built in Streamlit. It covers the motivation, architecture, key code sections, and local setup; the app includes basic authentication and options for user interaction. The author highlights Streamlit's rapid prototyping capabilities and how easy it is to use with Python.

Reflecting on 2024: My Journey Through Innovation, AI, and Development

In 2024, I published 35 blog posts on innovation in AI and development, with a focus on the Bee Agent Framework and IBM's watsonx.ai. The highlights include creating cheat sheets, exploring large language models, and writing practical guides. This has boosted my excitement for the challenges ahead in 2025.

Create a Custom Bee Agent with a Custom Python Weather Tool: A Step-by-Step Guide

This blog post explains how to integrate a custom Python tool into the Bee Agent using the Bee UI, focusing on real-time weather data retrieval. It outlines the setup process, agent customization, and testing to confirm that the tool works. It also shows that clear tool descriptions and well-defined agent interactions improve the tool's effectiveness and its potential for future applications.

My Bee Agent Framework and watsonx.ai Development Learning Journey

This post describes my learning journey developing with the Bee Agent Framework and the Bee Stack, focusing on their integration with watsonx.ai. It covers the setup process for different agent applications, including a weather retrieval agent and a travel assistant. It also provides guidance on contributing to the development of the Bee API and Bee UI and on configuring Podman.

A Bee API and Bee UI development example for adding a TypeScript tool made for the Bee Agent Framework

The blog post explains how to integrate a custom TypeScript tool, TravelAgentTool, into the Bee API and UI to extend the Bee Framework's functionality. It details the setup steps, including modifying source files and configuring environment variables, and demonstrates the tool answering travel inquiries. Code instructions for the implementation are provided throughout.

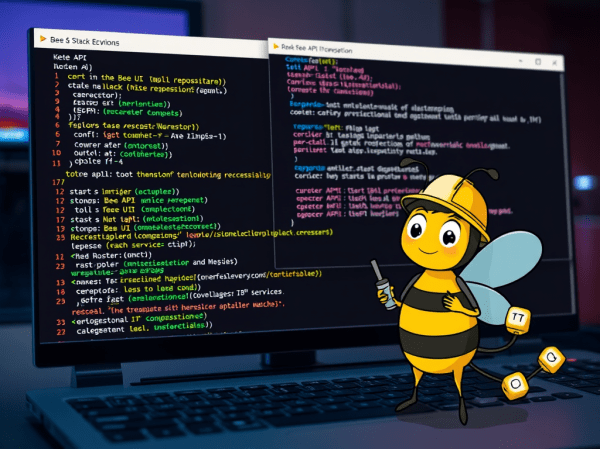

CheatSheet “Ready to Go” for Bee API and UI development

The post outlines how to set up a development environment for contributing to the Bee API and Bee UI repositories within the broader Bee Stack. It details the steps of cloning the repositories, starting the infrastructure, configuring the .env files, and launching both the Bee API and Bee UI servers, so that the environment is ready for development.

Create a Full-Screen Web-Chat with watsonx Assistant, IBM Cloud Code Engine and watsonx.ai

The blog post shows how to integrate watsonx Assistant and watsonx.ai to create a full-screen user interface for interacting with a large language model (LLM) with minimal coding. It outlines the motivation, architecture, setup process, and the specific actions needed to deploy the integration on IBM Cloud Code Engine.

Bee Agent example for a simple travel assistant using a custom tool and observing the agent behavior in detail (Bee Framework 0.0.34 and watsonx.ai)

This blog post explains how to implement a custom travel assistant agent with the Bee Agent Framework. It covers creating a tool that suggests vacation locations, using weather data, and integrating with MLflow for observability. The article emphasizes practical execution steps, system requirements, and the motivation for combining location and weather insights to answer user queries.

An Example of How to Use the “Bee Agent Framework” (v0.0.33) with watsonx.ai

This blog post explores the Bee Agent Framework integration with watsonx.ai, detailing the setup process for a weather agent example on macOS. It discusses the required installations, environment variable configuration, and the code updates needed because of changes in the framework. The execution output shows the agent retrieving current weather data for Las Vegas.