The popularity of llama.cpp and the optimized GGUF model format is growing. This post outlines the steps to run "Phi-3-Small-128K-Instruct" in GGUF format with llama.cpp on an IBM Cloud VSI (virtual server instance) with GPUs running Ubuntu 22.04. It covers the VSI setup, the CUDA toolkit, compiling llama.cpp, the Python environment, using the model, and additional resources.
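
For orientation, here is a minimal Python sketch that assembles a llama.cpp generation command for a GPU build. It assumes a recent llama.cpp build whose CLI binary is named `llama-cli` (older builds called it `main`) and a hypothetical model file path. The flags are llama.cpp's model (`-m`), prompt (`-p`), number of tokens to predict (`-n`) and GPU layer offload (`-ngl`) options.

```python
import shlex
from pathlib import Path

# Hypothetical location of the downloaded GGUF file; adjust to your setup.
MODEL_PATH = Path("models/phi-3-small-128k-instruct.gguf")


def build_llama_cli_command(model_path: Path, prompt: str,
                            n_predict: int = 128, gpu_layers: int = 99,
                            binary: str = "./llama-cli") -> list[str]:
    """Return the argv list for one llama.cpp generation run.

    -m    path to the GGUF model
    -p    prompt text
    -n    number of tokens to predict
    -ngl  number of layers to offload to the GPU (needs a CUDA build)
    """
    return [
        binary,
        "-m", str(model_path),
        "-p", prompt,
        "-n", str(n_predict),
        "-ngl", str(gpu_layers),
    ]


def as_shell_line(cmd: list[str]) -> str:
    """Quote the argv list so it can be pasted into a Bash shell."""
    return shlex.join(cmd)
```

The list form can be passed directly to `subprocess.run`, while `as_shell_line(build_llama_cli_command(MODEL_PATH, "What is GGUF?"))` gives a line to paste into the VSI's terminal. A large `-ngl` value simply offloads every layer the model has.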

AI Prompt Engineering: Streamlining Automation for Large Language Models

This blog post focuses on the importance of prompt engineering for AI models, particularly large language models (LLMs), in reducing manual effort and automating validation. It emphasizes the need for automation to handle growing amounts of test data and variable combinations, and discusses using the watsonx.ai Prompt Lab for manual work and initial automation. The post also highlights the importance of integrating automation with version control for consistency and reproducibility.
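
To show why variable combinations grow so quickly, here is a small sketch that expands one prompt template into a test case per combination of values and applies a trivial automated check. The template, the variable values and the label-based validation are hypothetical illustrations, not the Prompt Lab workflow from the post.

```python
from itertools import product
from string import Template

# Hypothetical prompt template and variable values under test.
PROMPT = Template("Classify the sentiment of this $product review in $language: $review")

VARIABLES = {
    "product": ["laptop", "phone"],
    "language": ["English", "German"],
    "review": ["Works great.", "Stopped working after a week."],
}


def expand_prompts(template: Template, variables: dict[str, list[str]]) -> list[dict]:
    """Create one test case per combination of variable values (Cartesian product)."""
    names = list(variables)
    cases = []
    for values in product(*(variables[name] for name in names)):
        bindings = dict(zip(names, values))
        cases.append({"variables": bindings, "prompt": template.substitute(bindings)})
    return cases


def validate(output: str, allowed_labels: set[str]) -> bool:
    """Minimal automated check: the model must answer with exactly one allowed label."""
    return output.strip().lower() in allowed_labels
```

Three variables with two values each already yield 2 × 2 × 2 = 8 prompts, and every added value multiplies that number, which is the point where manual checking stops scaling. Keeping the template and the variable file in version control makes each run reproducible.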

Fine-tune a large language model (LLM) for multi-turn conversations and run it on a Text Generation Inference (TGI) server

This blog post delves into the initial fine-tuning process for large language models (LLMs) for multi-turn conversations and their deployment on Text Generation Inference (TGI) servers. It covers topics such as use cases, data formats, training data preparation, server setup, and evaluation frameworks. The goal is to guide readers through the process of fine-tuning and deploying LLMs.
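
As an illustration of what a multi-turn training record can look like, the sketch below flattens a list of role/content turns into one training string and writes JSON Lines. The role markers and the single `text` field are assumptions chosen for the example; a real fine-tuning run should use the chat template of the base model being tuned.

```python
import json

# Hypothetical role markers; replace with the base model's own chat template.
ROLE_MARKERS = {"system": "<|system|>", "user": "<|user|>", "assistant": "<|assistant|>"}
END_MARKER = "<|end|>"


def format_conversation(turns: list[dict]) -> str:
    """Flatten [{"role": ..., "content": ...}, ...] into a single training string."""
    parts = []
    for turn in turns:
        role = turn["role"]
        if role not in ROLE_MARKERS:
            raise ValueError(f"unknown role: {role!r}")
        parts.append(f"{ROLE_MARKERS[role]}\n{turn['content']}{END_MARKER}\n")
    return "".join(parts)


def to_jsonl(conversations: list[list[dict]]) -> str:
    """One JSON object per line with a "text" field (a common, but not universal, layout)."""
    return "\n".join(json.dumps({"text": format_conversation(c)}) for c in conversations)
```

Keeping every turn of a conversation in one record lets the model see the earlier context when it learns each assistant reply.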

Getting started with Text Generation Inference (TGI) using a container to serve your LLM model

This blog post outlines a Bash automation for setting up and testing Text Generation Inference (TGI) in a container. It explains how to create a Python test client, start the TGI server, and troubleshoot common issues. The post emphasizes the benefits of using containers and references Hugging Face and NVIDIA technologies.
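
A Python test client for TGI can be quite small. The sketch below uses only the standard library and posts to TGI's `/generate` endpoint, which takes an `inputs` string plus optional `parameters` and returns `generated_text`. The address `localhost:8080` is an assumption about how the container port is published; it is not taken from the post.

```python
import json
import urllib.request

# Assumption: the TGI container is published on localhost:8080 (e.g. docker run ... -p 8080:80).
TGI_GENERATE_URL = "http://localhost:8080/generate"


def build_generate_request(prompt: str, max_new_tokens: int = 50,
                           url: str = TGI_GENERATE_URL) -> urllib.request.Request:
    """Build a POST request with the JSON body the /generate endpoint expects."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, max_new_tokens: int = 50, timeout: float = 60.0) -> str:
    """Send the prompt to the running TGI server and return only the generated text."""
    request = build_generate_request(prompt, max_new_tokens)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["generated_text"]
```

Once the container reports that it is ready, `print(generate("What is Text Generation Inference?"))` is a quick smoke test. A connection error at this point usually means the server is still loading the model or the port mapping differs.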

CheatSheet: How to add users to your watsonx project?

This cheat sheet provides a two-step guide for adding users to your watsonx project in IBM Cloud.

How to create a FastAPI server to use OpenAI models

Last time, I wrote a blog post about "IBM Watsonx.ai and a simple question-answering pipeline using Python and FastAPI". Afterwards, I discussed an OpenAI sample for a FastAPI application with my family, so I created a small FastAPI server to access OpenAI with Python.

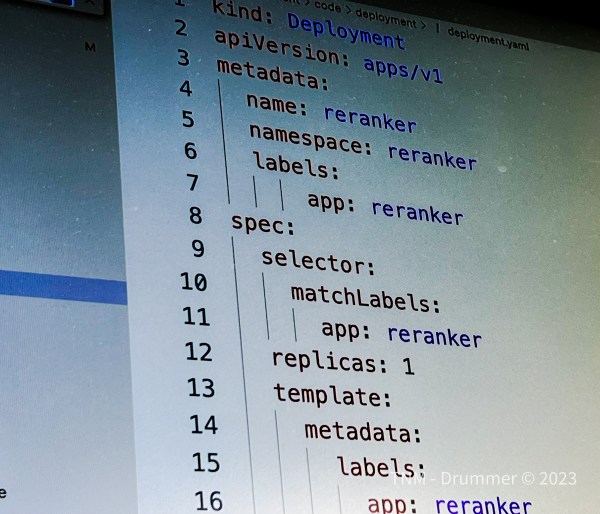
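
The core job of such a server is forwarding a question to OpenAI. Below is a minimal, standard-library-only sketch of that forwarding step. It assumes OpenAI's Chat Completions endpoint, an `OPENAI_API_KEY` environment variable and an assumed default model name. It is not the FastAPI code from the post.

```python
import json
import os
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"
# Assumed model name; use a model your account has access to.
DEFAULT_MODEL = "gpt-3.5-turbo"


def build_chat_request(question: str, api_key: str,
                       model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build a Chat Completions request containing a single user message."""
    payload = {"model": model, "messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def ask_openai(question: str, timeout: float = 60.0) -> str:
    """Send the question and return the text of the first choice."""
    request = build_chat_request(question, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

In the FastAPI application, a route handler could then return something like `{"answer": ask_openai(question)}`. Reading the key from the environment keeps it out of the source code.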

A custom Reranker deployment on Kubernetes

The objective of this project is to deploy the Reranker to a Kubernetes cluster in a VPC on IBM Cloud and access the REST API of the Reranker. The Reranker is a component of PrimeQA.
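
To make the reranker's role concrete, the sketch below shows its input/output contract: a query and candidate passages go in, and the passages come back reordered by a relevance score. PrimeQA's reranker uses a neural model. Here it is deliberately swapped for a toy token-overlap score, and the sketch does not reproduce the REST API of the deployed service.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a stand-in for a real model's input processing."""
    return set(re.findall(r"\w+", text.lower()))


def overlap_score(query: str, passage: str) -> float:
    """Toy relevance score: fraction of query tokens that also appear in the passage."""
    query_tokens = tokenize(query)
    if not query_tokens:
        return 0.0
    return len(query_tokens & tokenize(passage)) / len(query_tokens)


def rerank(query: str, passages: list[str], top_k: int = 3) -> list[tuple[str, float]]:
    """Return the top_k passages by descending score; ties keep their input order."""
    scored = [(passage, overlap_score(query, passage)) for passage in passages]
    scored.sort(key=lambda item: item[1], reverse=True)  # sort is stable even with reverse=True
    return scored[:top_k]
```

In the Kubernetes deployment, the same kind of call happens behind the Reranker's REST endpoint, with a trained model producing the scores instead of `overlap_score`.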

Some fun with “Watson Text to Speech” and voice model customization

My last blog post was about Watson Speech to Text language model customization; this blog post is about configuring a custom voice model for IBM Cloud Watson Text to Speech (TTS). Now it's time to have some fun with the Watson TTS service: I created a customization that makes the German pronunciation sound a little like the Palatinate dialect. Here are the differences, in two WAV files I created with a custom Watson Text to Speech voice model.

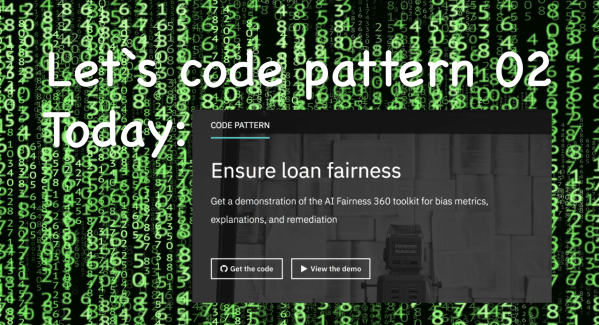
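
A custom voice model changes pronunciation through custom word entries that pair a word with a "sounds-like" translation. The sketch below builds the request for adding several words to an existing custom model via `POST /v1/customizations/{customization_id}/words`, using basic authentication with the user name `apikey`. The word list, service URL and IDs are hypothetical examples, not the entries used in the post.

```python
import base64
import json
import urllib.request

# Hypothetical "sounds-like" entries: standard German word -> spelling that nudges
# the voice toward a Palatinate (Pfälzisch) flavour. These are NOT from the post.
PALATINATE_SOUNDS_LIKE = {
    "ich": "isch",
    "nicht": "net",
    "das": "des",
}


def build_words_payload(mapping: dict[str, str]) -> dict:
    """Request body for adding several custom words to a TTS custom model."""
    return {"words": [{"word": word, "translation": sounds_like}
                      for word, sounds_like in mapping.items()]}


def build_add_words_request(service_url: str, customization_id: str,
                            api_key: str, mapping: dict[str, str]) -> urllib.request.Request:
    """POST request for .../v1/customizations/{customization_id}/words with basic auth."""
    url = f"{service_url.rstrip('/')}/v1/customizations/{customization_id}/words"
    credentials = base64.b64encode(f"apikey:{api_key}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(build_words_payload(mapping)).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {credentials}"},
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` would store the words in the custom model. Synthesis requests that reference the same customization ID should then use the adjusted pronunciations.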

Let's Code Pattern 02: Diversity and Inclusion – ensure loan fairness

The combination of the IBM Coder Program and Code Patterns motivated me to dig into the data science area a little, relating to the real-world topic of diversity and inclusion. It was also interesting to see how the AI Fairness 360 toolkit, which is an open-source library to help detect and remove bias in machine learning models,...
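
One bias metric that AI Fairness 360 reports is disparate impact: the rate of favorable outcomes (for example, loan approved) in the unprivileged group divided by the rate in the privileged group. The sketch computes it by hand on hypothetical outcome lists, without using the toolkit's API. It also applies the common "four-fifths" rule of thumb, which flags values below 0.8.

```python
def favorable_rate(outcomes: list[int]) -> float:
    """Share of favorable outcomes, where 1 = favorable (e.g. loan approved) and 0 = not."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    return sum(outcomes) / len(outcomes)


def disparate_impact(unprivileged: list[int], privileged: list[int]) -> float:
    """P(favorable | unprivileged) / P(favorable | privileged); 1.0 means parity."""
    privileged_rate = favorable_rate(privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return favorable_rate(unprivileged) / privileged_rate


def passes_four_fifths_rule(di: float, threshold: float = 0.8) -> bool:
    """Rule of thumb: disparate impact below 0.8 suggests possible bias."""
    return di >= threshold
```

With hypothetical approvals of 2 out of 5 for the unprivileged group and 4 out of 5 for the privileged group, `disparate_impact([1, 0, 0, 1, 0], [1, 1, 0, 1, 1])` is 0.4 / 0.8 = 0.5, well below the 0.8 threshold.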

big open source players were “cap by hat” @w-jax

I was at W-JAX 2018 in Munich last week. It was the first time I attended this big developer conference. As you can see in the headline picture, two big players in the open source area stood "cap by hat": the IBM and Red Hat booths, together with Viada 😉 One major topic this week was the potential deal: IBM is planning to buy Red Hat...