This blog post is about how to create a custom dictionary model for Watson NLP. One capability of Watson NLP is "Entity extraction to find mentions of entities (like person, organization, or date)." We will adapt a Watson NLP model to extract single entities, such as names and locations, from a given text. Each entity is identified by a dictionary entry and its label.

Run Watson NLP for Embed in a KServe ModelMesh Serving environment on an IBM Cloud Kubernetes cluster in a VPC environment

This blog post is about running a Watson NLP for Embed example in a KServe ModelMesh Serving environment on an IBM Cloud Kubernetes cluster in a Virtual Private Cloud environment. It reuses parts of the IBM Watson Libraries for Embed documentation.

Run Watson NLP for Embed on an IBM Cloud Kubernetes cluster in a Virtual Private Cloud environment

This blog post is about deploying the IBM Watson Natural Language Processing Library for Embed to an IBM Cloud Kubernetes cluster in a Virtual Private Cloud (VPC) environment. It is related to my blog post Run Watson NLP for Embed on IBM Cloud Code Engine. IBM Cloud Kubernetes cluster is a “certified, managed Kubernetes solution, built for creating a cluster of compute hosts to deploy and manage containerized apps on IBM Cloud”.

Run Watson NLP for Embed on IBM Cloud Code Engine

This blog post is about using the IBM Watson Natural Language Processing Library for Embed on IBM Cloud Code Engine. It is related to my blog post Run Watson NLP for Embed on your local computer with Docker. IBM Cloud Code Engine is a fully managed, serverless platform where you can run container images or batch jobs.

Run Watson NLP for Embed on your local computer with Docker

This blog post is about using the IBM Watson Natural Language Processing Library for Embed on your local computer with Docker. The IBM Watson Libraries for Embed are made for IBM Business Partners. Partners can get additional details about embeddable AI on the IBM Partner World page. If you are an IBM Business Partner, you can get free access to the IBM Watson Natural Language Processing Library for Embed. To get started with the libraries, you can use the link Watson Natural Language Processing Library for Embed home. The documentation is excellent and publicly available.

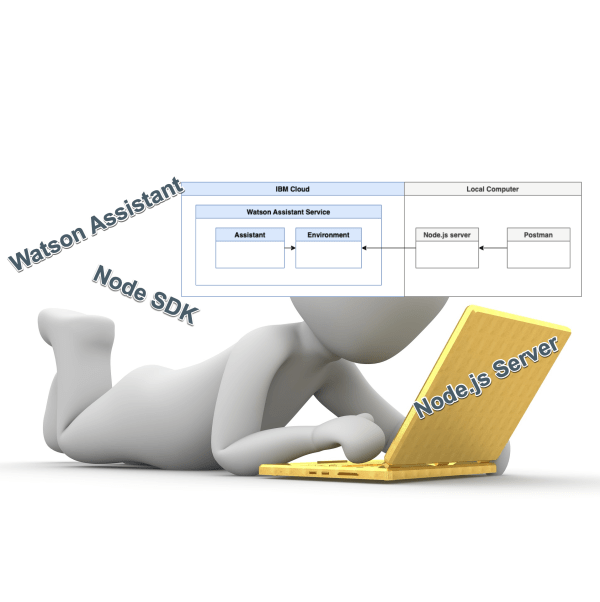

Simple Node.js server example using the Watson Assistant API v2

This blog post presents a simple example of using the Watson Assistant API v2 with the Node.js SDK to get a Watson Assistant sessionID and to send a message to Watson Assistant using this sessionID. Here is the GitHub project watson-assistant-simple-node-js-server-example.

Watson Speech to Text language model customization

This blog post is about IBM Cloud Watson Speech to Text (STT) language model customization. I recently took a look at the IBM Cloud Watson Assistant service, which is used to build conversational assistants. A conversation potentially involves speech input from users, which needs to be converted to text before it can be processed by AI, for example by the NLU.

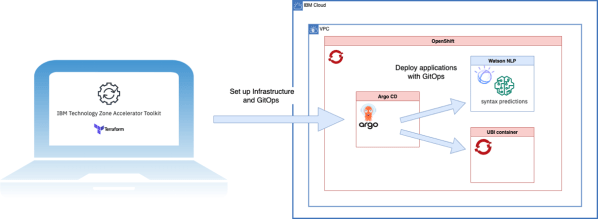

Everything as Code and easy automation with minimal Terraform and GitOps knowledge

Infrastructure as Code and GitOps are ongoing big topics related to DevOps and CI/CD, which need effective automation to shorten the software development lifecycle and simplify production deployments. In this blog post we don't talk much about these processes and methodologies. The blog post is more about how to reduce the effort of building automation by using the IBM Accelerator Toolkit.

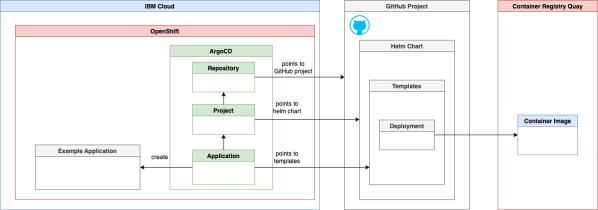

How to use a declarative setup for Argo CD to deploy an application using a Helm repository?

In this blog post I cover how to use the Argo CD declarative setup to deploy an application from a Helm repository.

Use IasCable to create a Virtual Private Cloud and a Red Hat OpenShift cluster on IBM Cloud

In this blog post we use the IasCable framework to create a Virtual Private Cloud and a Red Hat OpenShift cluster on IBM Cloud. I covered the starting point for the IasCable framework in my last blog post “Get started with an installable component infrastructure by selecting components from a catalog of available modules with IasCable”.