The objective is to set up a development environment for contributing to Bee API and Bee UI repositories. This is not about using the Bee Framework; it is about the broader Bee Stack.

This blog post outlines the current setup process (as of 2024.12.03) for a development environment aimed at contributing to the Bee API and Bee UI repositories within the broader Bee Stack. It covers cloning the repositories, starting the infrastructure, configuring the .env files, and launching the Bee API and Bee UI servers so that everything is ready for development.

For example, we may later want to add a custom TypeScript Bee Agent or a custom TypeScript Tool. To do that, we need to develop on the Bee Stack itself and change the implementation in the Bee API and Bee UI.

The image below shows the simplified architecture. As you can see, we will only work in the Bee UI and Bee API repositories in development mode; the remaining needed services will be started as infrastructure.

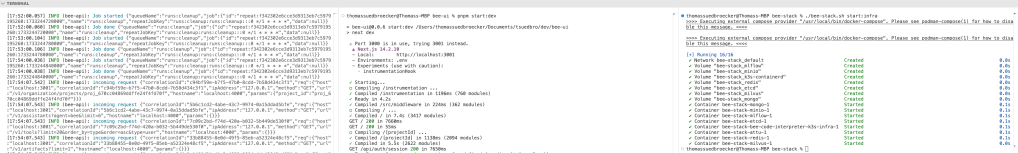

We will open three terminals: one for the infrastructure, one for the Bee API, and one for the Bee UI.

Note: This cheat sheet was verified on macOS with Podman as the container engine. The following instructions are only extracts from the repository documentation. In case of problems, please check the documentation inside the cloned repositories.
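Before starting, it can help to confirm that the command-line tools used in this cheat sheet are installed. The following is a minimal sketch; missing_tools is a hypothetical helper, and the tool list in the usage line is an assumption based on the commands that appear below.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every tool from the argument list that is not on PATH.
# Returns 0 when all tools are found, 1 otherwise.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `missing_tools git podman pnpm openssl curl && echo "all tools found"`.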

Table of contents

- Clone three repositories

- Start the basic Bee infrastructure

- Optional: Add additional content to the infrastructure start

- Start the Bee API

- Start the Bee UI

1. Clone three repositories

We will use three repositories from the Bee project. It is best to create a root folder into which we clone the following repositories:

- bee-stack

- bee-api

- bee-ui
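The clone step can be scripted as a small sketch. It assumes the three repositories are hosted under the i-am-bee GitHub organization (https://github.com/i-am-bee); bee_repo_url and clone_bee_repos are hypothetical helper names. Folders that already exist are skipped, so the script can be re-run safely.

```shell
#!/usr/bin/env bash
# Assumption: the repositories live under the i-am-bee GitHub organization.
BEE_GITHUB_ORG="https://github.com/i-am-bee"

# Hypothetical helper: print the clone URL for one repository name.
bee_repo_url() {
  printf '%s/%s.git\n' "$BEE_GITHUB_ORG" "$1"
}

# Hypothetical helper: clone bee-stack, bee-api, and bee-ui into the current
# (root) folder, skipping any repository folder that already exists.
clone_bee_repos() {
  local repo
  for repo in bee-stack bee-api bee-ui; do
    if [ -d "$repo" ]; then
      echo "skip: $repo already exists"
    else
      git clone "$(bee_repo_url "$repo")" || return 1
    fi
  done
}
```

Usage: from the root folder, source the file (for example `source clone-bee.sh`, a hypothetical file name) and run `clone_bee_repos`.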

2. Start the basic Bee infrastructure

Open a new terminal and navigate to the root folder where you cloned all three repositories.

To work with the UI and API, we need to start the basic infrastructure, which starts all required databases, MLflow, and the Python interpreter.

We must create a new .env file with the default values from example.env. Apart from the model backend described below, no additional configuration of the environment variables is needed; we can work with the defaults.

cd bee-stack

cp example.env .env

./bee-stack.sh start:infra

Note: If problems occur later, ensure the databases were initialized: start the entire stack once, stop it, and then start only the infrastructure.

./bee-stack.sh start

./bee-stack.sh stop

./bee-stack.sh start:infra

Note: Optionally, clean the data with:

./bee-stack.sh clean

Before starting, you should also configure your inference runtime, for example watsonx, and define a model. You can do this either by running the setup:

./bee-stack.sh setup

or by setting the values in the .env file directly, for example:

# Choose backend: watsonx/ollama/bam/openai

LLM_BACKEND=watsonx

EMBEDDING_BACKEND=watsonx

# Watsonx

WATSONX_PROJECT_ID=...

WATSONX_API_KEY=...

WATSONX_REGION=us-south
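To catch a forgotten placeholder before starting the infrastructure, a small check like the following can help. It is a sketch that assumes the .env file uses plain KEY=value lines (optionally prefixed with export) as shown above; check_env_vars is a hypothetical helper, and it treats an empty value, ..., or XXX as not yet set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report variables that are missing, empty, or still a
# placeholder ("..." or "XXX") in a dotenv-style file.
# Returns non-zero if any variable still needs a value.
check_env_vars() {
  local env_file="$1"; shift
  local missing=0 var value
  for var in "$@"; do
    # Take the last assignment of VAR, with or without an "export " prefix.
    value=$(sed -n -E "s/^(export[[:space:]]+)?${var}=(.*)$/\2/p" "$env_file" | tail -n 1)
    # Strip surrounding double quotes, if any.
    value="${value%\"}"
    value="${value#\"}"
    if [ -z "$value" ] || [ "$value" = "..." ] || [ "$value" = "XXX" ]; then
      echo "not set: $var"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage (from the root folder): `check_env_vars bee-stack/.env LLM_BACKEND EMBEDDING_BACKEND WATSONX_PROJECT_ID WATSONX_API_KEY WATSONX_REGION`. The same call works for the bee-api .env, whose lines use the export prefix.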

3. Optional: Add additional content to the infrastructure start

Note: If we want additional services to start together with the infrastructure, we need to edit the bee-stack/docker-compose.yml file. For example, to make bee-observe available as part of the infrastructure during development, we add infra to its profiles, so that the entry reads profiles: [ all, infra ].

bee-observe:
  image: icr.io/i-am-bee/bee-observe:0.0.4
  depends_on:
    - mlflow
    - mongo
    - redis
  ...
  ports:
    - "3009:3009"
  profiles: [ all, infra ]
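To confirm that the change took effect, you can ask Compose which services the infra profile would start. This is a sketch assuming a Compose v2 CLI available as docker compose; with Podman, substitute the compose command your installation provides (for example podman compose), which has not been verified here. list_infra_services and is_infra_service are hypothetical helper names. The listed services should include those without a profile plus those enabled by infra.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the services Compose would start for the "infra" profile.
# Assumption: a Compose v2 CLI is available as "docker compose".
list_infra_services() {
  local compose_file="${1:-bee-stack/docker-compose.yml}"
  docker compose -f "$compose_file" --profile infra config --services
}

# Hypothetical helper: succeed only if SERVICE (default: bee-observe)
# appears as an exact line in the infra service list.
is_infra_service() {
  local service="${1:-bee-observe}" compose_file="${2:-bee-stack/docker-compose.yml}"
  list_infra_services "$compose_file" | grep -qx "$service"
}
```

Usage (from the root folder): `is_infra_service bee-observe && echo "bee-observe is part of infra"`.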

4. Start the Bee API

Now we can start the Bee API.

- Ensure you create a new .env file in the bee-api folder with the default values of the .env_template, or customize the .env for your model inference provider. In this case, we use watsonx.

cp .env_template .env

Insert the values needed to connect to watsonx.ai:

# https://www.ibm.com/products/watsonx-ai

export WATSONX_REGION="eu-de"

export WATSONX_PROJECT_ID="XXX"

export WATSONX_API_KEY="XXX"

export WATSONX_MODEL="meta-llama/llama-3-1-70b-instruct"

Open a new terminal and navigate to the root folder where you cloned all three repositories.

- Install the dependencies:

cd bee-api

pnpm install

- Seed the database (still in the bee-api folder)

The following command sets up the database for initial use; mikro-orm seeder:run seeds the database using the seeder class. Run it, wait about 10 seconds, and then stop it with Ctrl+C.

pnpm run mikro-orm seeder:run

- Start the Bee API server (still in the bee-api folder):

pnpm start:dev

- Open the Bee API Swagger UI in a browser:

http://0.0.0.0:4000/docs
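The server may need a few seconds before it answers. A small polling loop can wait until the Swagger UI responds; this is a sketch assuming curl is installed, and wait_for_url is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll URL about once per second until it answers with a
# non-error HTTP status, or give up after roughly TIMEOUT seconds (default 60).
wait_for_url() {
  local url="$1" timeout="${2:-60}" elapsed=0
  # -f: HTTP status >= 400 counts as failure; -s: no progress or error output;
  # --max-time bounds each single attempt.
  until curl -fs -o /dev/null --max-time 2 "$url"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out waiting for $url" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  echo "ready: $url"
}
```

Usage: `wait_for_url http://0.0.0.0:4000/docs 120`. The same helper can later be pointed at the Bee UI (http://localhost:3000/).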

Note: You can log on with the DUMMY_JWT_TOKEN saved in the Bee UI .env file.
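Instead of opening the file by hand, the token can be read from the Bee UI .env with a short helper. This is a sketch assuming the variable is stored as a single KEY="value" line; env_value is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of KEY from a dotenv-style file,
# stripping an optional "export " prefix and surrounding double quotes.
# Returns 1 if the key is missing or empty.
env_value() {
  local env_file="$1" key="$2" value
  value=$(sed -n -E "s/^(export[[:space:]]+)?${key}=(.*)$/\2/p" "$env_file" | tail -n 1)
  value="${value%\"}"
  value="${value#\"}"
  [ -n "$value" ] || return 1
  printf '%s\n' "$value"
}
```

Usage on macOS (from the root folder): `env_value bee-ui/.env DUMMY_JWT_TOKEN | pbcopy` copies the token to the clipboard so it can be pasted where the Swagger UI asks for it.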

5. Start the Bee UI

Open a new terminal and navigate to the root folder where you cloned all three repositories. Now it is time to start the Bee UI.

The Bee UI contains a .env file that includes a DUMMY_JWT_TOKEN you can use for authentication against the Bee API.

In this situation, we don't need to create a new environment file; we can use the existing one, but we need to configure it slightly.

- Create a NEXTAUTH_SECRET for the .env file:

openssl rand -base64 32

Insert the resulting value into the variable:

NEXTAUTH_SECRET="XXXX"
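The two manual steps (generate the secret, paste it into the file) can be combined. The sketch below is a hypothetical helper, set_env_var, that rewrites the file through a temporary copy instead of using sed -i, because the -i option behaves differently in the BSD sed shipped with macOS and in GNU sed. Note that it moves the updated line to the end of the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY="VALUE" in a dotenv-style file.
# Existing assignments of KEY (with or without "export ") are removed,
# and the new assignment is appended at the end of the file.
set_env_var() {
  local env_file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  # grep -v exits 1 when no lines remain, so do not treat that as an error.
  grep -v -E "^(export[[:space:]]+)?${key}=" "$env_file" > "$tmp" || true
  printf '%s="%s"\n' "$key" "$value" >> "$tmp"
  mv "$tmp" "$env_file"
}
```

Usage (from the root folder): `set_env_var bee-ui/.env NEXTAUTH_SECRET "$(openssl rand -base64 32)"`.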

Example of the resulting environment file.

# Create .env.local to overwrite any variables, see .env.example

API_URL=http://localhost:4000/

# Bypass authentication with jwt token from the API well-known file

DUMMY_JWT_TOKEN="SEE IN THE FILE"

NEXT_PUBLIC_APP_NAME="Bee"

NEXT_PUBLIC_FEATURE_FLAGS='{"Knowledge":true,"Files":true,"FunctionTools":true}'

NEXT_PUBLIC_ORGANIZATION_ID_DEFAULT=org_670cc04869ddffe24f4fd70d

NEXT_PUBLIC_PROJECT_ID_DEFAULT=proj_670cc04869ddffe24f4fd70f

# Sensitive secret used to sign nextauth jwts

# You can use `$ openssl rand -base64 32` to generate new one

NEXTAUTH_SECRET="YOUR GENERATED SECRET"

Now we can start the UI.

- Install the dependencies:

cd bee-ui

pnpm install

- Start the Bee UI server in development mode (still in the bee-ui folder):

pnpm start:dev

- Open the Bee UI in a browser. Use Chrome; note that Safari may force HTTPS instead of HTTP.

http://localhost:3000/

Example usage:

Now we are ready to go.

I hope this was useful to you, and let's see what's next!

Greetings,

Thomas

#watsonx, #typescript, #ai, #ibm, #agents, #beeagentframework, #beeagent, #aiagents, #podman, #tools, #mlflow, #beeui, #beeapi