This blog post is a (bigger) cheat sheet about:

- How to set up a simple Nginx load balancer on a Virtual Server Instance (VSI) that runs in a Virtual Private Cloud (VPC).

- How to configure a Red Hat OpenShift project so that a REST endpoint of an application inside the project can be invoked from a VSI that runs in the same VPC as the Red Hat OpenShift cluster.

We don’t want to discuss:

- Is this a good setup?

- What could be a motivation to set up such a scenario?

This blog post only covers how to set up an example and how it works. It references official documentation resources and other blog posts.

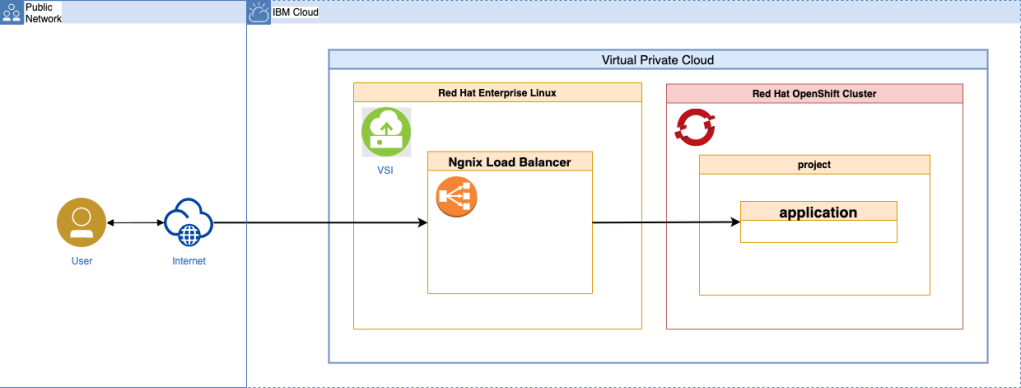

The diagram above shows a simplified overview.

- Virtual Private Cloud (VPC)

- Nginx load balancer

- Virtual Server Instance (VSI)

- Red Hat OpenShift cluster and project

- Example application

All of the resources listed above run on IBM Cloud.

We will cover two main topics for that setup:

- A simplified architecture overview.

- The steps to set up an example instance of the configuration on IBM Cloud:

  - Set up VPC and OpenShift on IBM Cloud.

  - Deploy an example application to OpenShift.

  - Create a private load balancer as a service for the example application.

  - Set up a VSI and configure Nginx as load balancer.

  - Verify the setup is working.

Architecture overview¶

Let me divide the architecture overview into two simplified diagrams.

- The first diagram focuses on the dependencies of the created IBM Cloud resources.

The red lines show the invocation sequence from the internet to the endpoint of our example application, which runs in OpenShift.

- Access the endpoint provided by the floating IP address of the VSI in the VPC network.

- Route from the Nginx load balancer running on the VSI to the private load balancer in the VPC network.

- Access the OpenShift cluster using a service.

The following table lists the resources you see in the diagram above.

| Resources | Notes |
|---|---|
| VPC (Virtual Private Cloud) | The private network in the IBM Cloud. |
| Routing Table | Defines routes for your own traffic. |
| Security Groups | Act as a firewall to protect the worker nodes and the VSI. |
| Public Gateway | Gives the worker nodes access to the external internet. |
| Subnet | In this example only one subnet is used. |
| Load Balancer (public) | Access the exposed route of the cluster from the internet. |
| Load Balancer (private) | Routes internal traffic to a specific application inside the OpenShift cluster. |
| Virtual Private Endpoint Gateway | Enables the VPC to connect to supported IBM Cloud services. |
| Cloud Object Storage | Connected to the OpenShift cluster. |
| Red Hat OpenShift on IBM Cloud (VPC) | An OpenShift cluster with two worker nodes. |
| Virtual Server Instance (VSI) | An instance with Red Hat Enterprise Linux 8.4 installed. |

- The second diagram focuses on the dependencies of the example application.

The red lines show the invocation sequence from the endpoint on the internet to the running application in OpenShift.

- Access the endpoint provided by the floating IP address of the VSI in the VPC network.

- Route to a private load balancer in the VPC network. A simple example configuration for the Nginx load balancer is available in a GitHub project I made: Example configuration of the Nginx load balancer.

- Access the example application in the OpenShift cluster using a service. A simple example configuration for the service is also available in a GitHub project I made.

The setup in IBM Cloud¶

Now let us follow the main steps in more detail, using an example application written in Node.js.

As a reminder, these are the main steps:

- Set up VPC and OpenShift on IBM Cloud.

- Deploy an example application to OpenShift.

- Create a private load balancer as a service for the example application.

- Set up a VSI and configure Nginx as load balancer.

- Verify the setup is working.

Step 1: Setup VPC and OpenShift on IBM Cloud¶

The setup is already described in this blog post I wrote some time ago, and we can follow its steps. Here is an extract of the main steps we need to do.

- Create a Virtual Private Cloud.

- Rename the automatically created elements of the Virtual Private Cloud.

- Create a Public Gateway in the Virtual Private Cloud and attach it to the Subnet you plan to use.

- Create the Cloud Object Storage.

- Create the Red Hat OpenShift cluster.

Step 2: Deploy an example application to OpenShift¶

Now we will deploy my preferred example application to the Red Hat OpenShift cluster. These are our next two tasks.

- Get the example source code.

- Deploy the example application.

2.1. GET THE EXAMPLE SOURCE CODE¶

Step 2.1.1: Open the IBM Cloud Shell from the IBM Cloud Web UI or open a terminal.

If we are going to use a terminal on our local computer, we need to ensure the needed CLIs and tools are installed. These are the CLIs and tools for the script automation.

- bash shell

- oc – OpenShift Command Line Interface (CLI)

- IBM Cloud CLI

- IBM Cloud VPC infrastructure plugin

- sed

- jq

- grep

- awk

- git
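Before running the scripts on a local computer, it can help to check which of these tools are missing. The following is a minimal sketch, not part of the example repository: the function name `missing_tools` is an assumption made for illustration, and `ibmcloud` is used as the binary name of the IBM Cloud CLI. It does not check whether the VPC infrastructure plugin is installed.

```shell
#!/bin/sh
# Print every tool from the argument list that is not found on the PATH.
# (missing_tools is a hypothetical helper name, not part of the repository.)
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Tools named in the list above.
REQUIRED_TOOLS="bash oc ibmcloud sed jq grep awk git"

# Word splitting of $REQUIRED_TOOLS is intended here.
MISSING=$(missing_tools $REQUIRED_TOOLS)
if [ -n "$MISSING" ]; then
  echo "Missing tools:"
  echo "$MISSING"
else
  echo "All required tools are installed."
fi
```

In the IBM Cloud Shell these tools are usually preinstalled, so the check is mainly useful on a local machine.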

Step 2.1.2: Clone git repository¶

git clone https://github.com/thomassuedbroecker/vend

cd vend

Step 2.1.3: Checkout branch¶

git checkout -b vend-internal-vpc-route

Step 2.1.4: Open the /openshift/scripts folder¶

cd openshift/scripts

2.2 DEPLOY THE EXAMPLE APPLICATION¶

Now we deploy the example application to OpenShift.

Step 2.2.1: Get an access token and log in to OpenShift¶

oc login --token=[YOUR_TOKEN] --server=[YOUR_SERVER]

Note: To do this, we can follow the blog post Log in to an IBM Cloud Red Hat OpenShift cluster using the IBM Cloud and OpenShift CLI.

Step 2.2.2: Run the bash script automation¶

Press return and move on when the input is requested.

bash deploy-application.sh

STEP 2.2.3: Get the route URL of the vend application¶

Get the route URL with the following command.

oc get route | grep "vend"
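Instead of copying the host name by hand, it can be extracted from the table that `oc get route` prints. This is a small sketch under two assumptions: the host name is in the second column (HOST/PORT) of that table, and the route is served over plain HTTP. The helper name `route_host` is made up for illustration.

```shell
# Read the table printed by `oc get route` on stdin and print the HOST/PORT
# column (second column) of the first route whose name contains the pattern.
# The header line, which starts with NAME, is skipped.
route_host() {
  awk -v pat="$1" '$1 != "NAME" && index($1, pat) > 0 { print $2; exit }'
}

# Usage (requires a logged-in oc session):
#   HOST=$(oc get route | route_host vend)
#   curl -i "http://$HOST"
```

If the route uses TLS termination, the URL scheme in the usage example would need to be `https` instead.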

STEP 2.2.4: Verify the output in your browser¶

Now open the route and verify the output in your browser:

"{\"message\":\"vend test - vend-load-balancer-demo-openshift\"}"

STEP 2.2.5: Verify the vend application log file output that is saved in the persistent volume claim¶

- Get the name of the running pod

oc get pods | grep "vend"

Example output:

vend-6879fc49cc-fv72d 1/1 Running 0 3d3h

- Print the log file from the running pod

oc exec vend-6879fc49cc-fv72d -- cat ./logs/log.txt

Example output:

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX130402] VEND_USAGE : vend-demo-secret-openshift

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX30403] USER : user

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX30403] USER_PASSWORD : user

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX30403] ADMINUSER : admin

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX0403] ADMINUSER_PASSWORD : admin

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX0407] Info - envDefined==false

*** INF0: 2021-11-7 (XX:XX:30) [1636XXX1906] VEND_USAGE : vend-image-stream-demo-openshift
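The pod name changes with every new deployment, so copying it from the output gets tedious. A possible shortcut, sketched here with a hypothetical helper called `first_pod`, is to pick the first matching pod from `oc get pods -o name`, which prints lines in the form `pod/<name>`.

```shell
# Read `oc get pods -o name` output (lines like "pod/<name>") on stdin and
# print the bare name of the first pod whose name contains the pattern.
first_pod() {
  grep "$1" | head -n 1 | sed 's|^pod/||'
}

# Usage (requires a logged-in oc session):
#   POD=$(oc get pods -o name | first_pod vend)
#   oc exec "$POD" -- cat ./logs/log.txt
```

The sketch simply takes the first match; with several replicas, each pod would need to be inspected separately.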

Step 3: Create private load balancer as a service for the example application¶

Ensure you are in the right directory of the cloned GitHub project. We will use a bash script to create the private load balancer that points to our application.

The bash script creates the service (1) and the private load balancer (2).

The bash script runs the following functions in sequence.

| # | Function | Notes |
|---|---|---|
| 1 | setupCLIenv | Configure the IBM Cloud target and resource group. |
| 2 | getVPCconfig | Get the VPC configuration and save it into variables. |
| 3 | setOCProject | Ensure we are in the right OpenShift project. |
| 4 | preparePrivateLoadbalancerService | Configure the service specification yaml file. |
| 5 | createPrivateLoadbalancerService | Apply the service configuration file and verify that the private load balancer exists. |
| 6 | displayEndpoints | Show the endpoint URLs: the VSI floating IP, which we can use from the internet, and the private load balancer host name, which we can use from inside the VSI in our VPC. |

This is the service configuration template that the bash script uses. In our case we use only two annotations:

service.kubernetes.io/ibm-load-balancer-cloud-provider-ip-type: "private"

service.kubernetes.io/ibm-load-balancer-cloud-provider-zone: "VPC_ZONE"

With that configuration we map the service to the example application:

    selector:
      app: vend-app
    ports:
      - name: http
        protocol: TCP
        port: 8080

Note: For more details visit the IBM Cloud documentation.

    apiVersion: v1
    kind: Service
    metadata:
      name: APP_NAME-vpc-nlb-VPC_ZONE
      labels:
        customerLoadbalancer: vend-application
        app: vend-app
      annotations:
        service.kubernetes.io/ibm-load-balancer-cloud-provider-ip-type: "private"
        service.kubernetes.io/ibm-load-balancer-cloud-provider-zone: "VPC_ZONE"
    spec:
      type: LoadBalancer
      selector:
        app: vend-app
      ports:
        - name: http
          protocol: TCP
          port: 8080
      externalTrafficPolicy: Local
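The template contains the placeholders APP_NAME and VPC_ZONE, which have to be replaced with real values before the file is applied. The following sketch shows one way to do that with sed. It is only an illustration and not necessarily how the repository's bash script does it; the function name and the file names in the usage comment are assumptions.

```shell
# Replace the APP_NAME and VPC_ZONE placeholders in a template read from stdin.
# $1 = application name, $2 = VPC zone
# Note: values that contain "/" or "&" would need escaping for sed.
render_service_template() {
  sed -e "s/APP_NAME/$1/g" -e "s/VPC_ZONE/$2/g"
}

# Usage, with hypothetical file names:
#   render_service_template vend us-south-1 < service-template.yaml > service.yaml
#   oc apply -f service.yaml
```

Because VPC_ZONE appears both in the service name and in the zone annotation, a global replacement keeps the two in sync.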

STEP 3.1: Open the /openshift/scripts folder¶

cd openshift/scripts

Step 3.2: Run the bash script automation¶

Press return and move on when the input is requested.

bash create-private-load-balancer.sh

Step 3.3: Verify the setup¶

We can open the OpenShift web console and verify the service creation. This is an example result in the OpenShift web console.

We can open the IBM Cloud web UI with the following link:

https://cloud.ibm.com/vpc-ext/network/loadBalancers

That link opens the list of load balancers in the VPC.
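The same check is possible from the command line. Provisioning a load balancer can take several minutes, and until it is ready the service has no host name in its status. The sketch below polls the standard Kubernetes status field `.status.loadBalancer.ingress[0].hostname`; the function names, the retry count, and the wait interval are assumptions chosen for illustration.

```shell
# Print the host name that the load balancer assigned to a service.
# $1 = service name (the rendered APP_NAME-vpc-nlb-VPC_ZONE name)
lb_hostname() {
  oc get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Poll until the service has a host name.
# $1 = service name, $2 = max attempts (default 30), $3 = seconds between attempts (default 10)
wait_for_lb() {
  tries=0
  while [ "$tries" -lt "${2:-30}" ]; do
    host=$(lb_hostname "$1")
    if [ -n "$host" ]; then
      printf '%s\n' "$host"
      return 0
    fi
    tries=$((tries + 1))
    sleep "${3:-10}"
  done
  echo "No load balancer host name for service $1 yet" >&2
  return 1
}

# Usage (requires a logged-in oc session; the service name is an example):
#   LOADBALANCER_HOSTNAME=$(wait_for_lb vend-vpc-nlb-us-south-1)
```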

Step 4: Setup VSI and configure Nginx¶

Set up the VSI using Red Hat Enterprise Linux (RHEL).

To create a VSI, you can follow the blog post How to create a single virtual server instance (VSI) in a virtual private cloud (VPC) infrastructure on IBM Cloud, which I wrote some time ago. The only difference is that we need to select Red Hat Enterprise Linux 8.4 as the operating system.

After we created the VSI, we need to follow these remaining steps:

- Access the Virtual Server Instance on IBM Cloud.

- Install basic tools on the minimal RHEL installation.

- Install and configure Nginx.

4.1 ACCESS THE VIRTUAL SERVER INSTANCE ON IBM CLOUD¶

Step 4.1.1: Log in to IBM Cloud¶

ibmcloud login

Note: Add the --sso option if you log in with a federated ID.

Step 4.1.2: Set your region settings for IBM Cloud¶

export RESOURCE_GROUP=default

export REGION=us-south

ibmcloud target -g $RESOURCE_GROUP

ibmcloud target -r $REGION

Step 4.1.3: Get the IP address for the VSI¶

- List the existing VSIs

ibmcloud is instances

- Insert the name of your VSI in the command below

export VSI_NAME=YOUR_VSI_NAME

FLOATING_IP=$(ibmcloud is instances | grep "$VSI_NAME" | awk '{print $5;}')

echo $FLOATING_IP
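The awk call above depends on the floating IP being in the fifth column of the CLI output, which may differ between CLI versions. A quick sanity check before using the value for SSH can catch that. This is a minimal sketch; `looks_like_ipv4` is a hypothetical helper that only checks the format.

```shell
# Return success if the argument looks like a dotted IPv4 address.
# This is a format check only; it does not verify that each octet is <= 255.
looks_like_ipv4() {
  printf '%s\n' "$1" | grep -Eq '^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
}

# Usage after the commands above:
#   if looks_like_ipv4 "$FLOATING_IP"; then
#     echo "Floating IP: $FLOATING_IP"
#   else
#     echo "Unexpected value '$FLOATING_IP'; check the column number in the awk call" >&2
#   fi
```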

Step 4.1.4: Get your SSH key¶

cd ~/.ssh

ls

Example output:

id_rsa id_rsa.pub

Step 4.1.5: Insert your SSH key name in the command below¶

PATH_TO_PRIVATE_KEY_FILE=id_rsa

ssh -i $PATH_TO_PRIVATE_KEY_FILE root@$FLOATING_IP

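- Possible problem: SSH refuses to connect because the host key stored for this IP address no longer matches, for example when the floating IP was used by another VSI before.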

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!

Someone could be eavesdropping on you right now (man-in-the-middle attack)!

It is also possible that a host key has just been changed.

The fingerprint for the ED25519 key sent by the remote host is

SHA256:0tzMCJXYbG2MVnsn4gImE

Please contact your system administrator.

To resolve this, delete the outdated entry for the floating IP from the known_hosts file:

nano known_hosts
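As an alternative to editing the file by hand, `ssh-keygen -R` removes all entries for a host from a known_hosts file and keeps a backup with the suffix `.old`. The wrapper below is a small sketch and `forget_host` is a name chosen for illustration. Only do this when you know why the host key changed, for example because the VSI was recreated.

```shell
# Remove all known_hosts entries for a host.
# $1 = host name or IP address
# $2 = known_hosts file (defaults to ~/.ssh/known_hosts)
forget_host() {
  ssh-keygen -R "$1" -f "${2:-$HOME/.ssh/known_hosts}"
}

# Usage:
#   forget_host "$FLOATING_IP"
#   ssh -i "$PATH_TO_PRIVATE_KEY_FILE" "root@$FLOATING_IP"
```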

Step 4.2 Configure the RHEL VSI¶

Step 4.2.1: Activate the web console¶

systemctl enable --now cockpit.socket

Step 4.2.2: Configure vpcuser¶

We configure the automatically created user vpcuser, which also has administration rights. Once we give this user a password, we can also access the VSI with just a user ID and password.

Reminder: By default it is only possible to access the VSI with an SSH key.

- Change the password

passwd vpcuser

- Log in as vpcuser

su - vpcuser

- Back to root user

exit

- List all users

cat /etc/passwd

Step 4.2.3: Install nano¶

Nano is a useful text editor that is easier to use than vi.

sudo dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

sudo dnf upgrade

yum install nano

Step 4.2.4: Install telnet and nslookup¶

We install these useful tools so that nslookup (part of the bind-utils package) and telnet are available in case we need them later.

dnf update -y

dnf install bind-utils -y

dnf install telnet

STEP 4.3 INSTALL AND CONFIGURE NGINX¶

Based on the known information about the private load balancer in the VPC, we install and configure the Nginx load balancer on the VSI.

Step 4.3.1 Install Nginx¶

dnf install nginx

Step 4.3.2: Start Nginx as a service on RHEL¶

systemctl start nginx

systemctl enable nginx

Example output:

Created symlink /etc/systemd/system/multi-user.target.wants/nginx.service → /usr/lib/systemd/system/nginx.service.

Step 4.3.3: Configure the firewall¶

firewall-cmd --zone=public --permanent --add-service=http

firewall-cmd --reload

Step 4.3.4: Configure Nginx load balancer¶

- Open the nginx.conf file

nano /etc/nginx/nginx.conf

- Replace the content of the existing Nginx configuration file with the Nginx configuration that was created by the bash script. We should find the created file at openshift/vsi-ngnix/ngnix.conf.

We find the example template here.

    error_log /var/log/nginx/error.log;
    events {}
    http {
      log_format upstreamlog '** [$time_local] - $server_name to: $upstream_addr - ["$request"] '
                             '[host: $host] [x-forwarded-host: $server_name] proxy-add [$proxy_add_x_forwarded_for]'
                             '[real ip: $remote_addr] [upgrade: $http_upgrade]';
      upstream vend {
        server LOADBALANCER_HOSTNAME:8080;
      }
      access_log /var/log/nginx/access.log upstreamlog;
      server {
        listen 80;
        server_name vend;
        location / {
          proxy_redirect off;
          proxy_pass http://vend;
          proxy_http_version 1.1;
          proxy_set_header host $host;
          proxy_set_header x-forwarded-host $hostname;
          proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
          proxy_set_header x-real-ip $remote_addr;
          proxy_set_header upgrade $http_upgrade;
          proxy_set_header connection "upgrade";
        }
        location /nginx_status {
          stub_status;
        }
      }
    }

- Restart Nginx

systemctl is-enabled nginx

systemctl restart nginx

- (Optional) Test the configuration

nginx -t

- (Optional) Inspect the access.log

more /var/log/nginx/access.log
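A restart with a broken configuration file leaves Nginx stopped, so it can be safer to run the syntax check first and restart only when it passes. The following is a minimal sketch of that order; the function name is an assumption, and it has to run as root on the VSI.

```shell
# Restart Nginx only if the configuration passes the syntax check.
restart_nginx_if_valid() {
  if nginx -t; then
    systemctl restart nginx
  else
    echo "nginx configuration test failed; service not restarted" >&2
    return 1
  fi
}

# Usage:
#   restart_nginx_if_valid
```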

Step 5: Verify the setup¶

We can open a browser such as Safari and enter the floating IP address of the VSI,

or we can just run the following cURL command in a terminal.

curl -i http://YOUR_FLOATING_IP_FOR_THE_VSI

Example output:

HTTP/1.1 200 OK

Server: nginx/1.14.1

Date: Sun, 19 Dec 2021 18:11:38 GMT

Content-Type: application/json; charset=utf-8

Content-Length: 65

Connection: keep-alive

X-Powered-By: Express

Access-Control-Allow-Origin: *

WWW-Authenticate: Basic realm="example"

ETag: W/"41-mJId5GtTq6pvcI+uaySnp04Kvl0"

"{\"message\":\"vend test - vend-load-balancer-demo-openshift\"}"

We can also open the running container in the pod and inspect log.txt, where we see the full invocation of our application.
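For a repeatable check, the HTTP status code can be tested in a small script instead of reading the headers by eye. This is a sketch with hypothetical helper names; it uses the curl options `-s`, `-o`, and `-w '%{http_code}'` and treats only status 200 as success.

```shell
# Print the HTTP status code returned for a URL ("000" if the connection fails).
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Return success only if the URL answers with HTTP 200.
check_endpoint() {
  status=$(http_status "$1") || true
  if [ "$status" = "200" ]; then
    echo "OK: $1 returned 200"
  else
    echo "FAILED: $1 returned '$status'" >&2
    return 1
  fi
}

# Usage:
#   check_endpoint "http://YOUR_FLOATING_IP_FOR_THE_VSI"
#   check_endpoint "http://YOUR_FLOATING_IP_FOR_THE_VSI/nginx_status"
```

The second usage line calls the stub_status location from the Nginx configuration, which shows whether Nginx itself is answering even when the upstream application is not.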

Summary¶

This blog post shows that it is useful to know the VPC resources and their dependencies, the application itself, and the deployment on Red Hat OpenShift in more detail.

Let us list the topics we touched on in the blog post:

- The network components in VPC

- The operating system, here we used Red Hat Enterprise Linux 8.4

- The Node.js application implementation

- The configuration of an Nginx load balancer

- The Kubernetes/OpenShift configurations

- The IBM Cloud usage

Useful links and additional information¶

- Install Nginx

- Nginx load balancer

- Restart Nginx

- Uninstall Nginx

- Virtual Server Instance (VSI)

- About IBM Cloud Application Load Balancer for VPC

- Setting up an Application Load Balancer for VPC

I hope this was useful to you and let’s see what’s next?

Greetings,

Thomas

#redhat, #openshift, #vsi, #nginx, #vpc, #ibmcloud, #nodejs, #bash, #rhel