Over the past months and years, I’ve had countless conversations (in projects, meetings, and late-night chats) about Generative AI combined with agents (Agentic AI). Again and again, I heard the same tension: “We need deterministic systems.” And again and again, I felt the same pushback inside me… until I stepped back and connected a few personal dots.

This post is not a tutorial. It reflects my own experience and the conclusions I drew from many exchanges and perspective shifts, including great discussions with Max Jesch, Niklas Heidloff, and many others about introducing AI agents in enterprises. I’m simply sharing what resonated with me, hoping it’s useful for your decisions in the rest of 2025.

Short version: Agentic AI won’t be 100% “right.” It must be trustworthy enough to operate under risk — just like every other technology we already rely on.

Table of Contents

- Private vs. Business vs. Enterprise: Different Contexts, Different Expectations

- Two Stories That Changed How I Think About “Deterministic”

- From “Deterministic” to “Trustworthy”

- What “Trustworthy Enough” Looks Like in Practice

- A Reality Check: The World Is Moving — Fast

- My Personal Takeaway

- Further Reading (context I found helpful)

- References (short quotes)

1. Private vs. Business vs. Enterprise: Different Contexts, Different Expectations

In our private life, we often start with less domain knowledge, and we’re amazed at how quickly AI (ChatGPT, Claude, Suno, Sora, and more) gives us a useful answer, a starting point, or a creative idea. “90% right” feels magical because it unlocks momentum.

In business, and especially in enterprise environments, the expectations change:

- More proprietary and regulated data, more processes, more stakeholders

- Failure modes include reputation, compliance, and legal consequences

- Expectations shift from “helpful” to “precise and auditable”

That’s exactly the gap Max Jesch and I see in practice — the difference between private delight and enterprise responsibility is real, and it needs deliberate handling, not wishful thinking. (Podcast series, German.) assono.de

For a deeper dive into how I frame those expectations, here’s my earlier post: The Rise of Agentic AI and Managing Expectations.

2. Two Stories That Changed How I Think About “Deterministic”

These two personal memories made me rethink the reflex that “AI must be deterministic”—even in critical business contexts. With the right safeguards, accepting some non-determinism can be the right approach.

2.1 Hardware isn’t fully tested, but we fly!

Decades ago, I learned you simply cannot exhaustively test every transistor on a modern processor. And still, we step into planes and cars. Why? Because we engineer for reliability under uncertainty — layered safety, redundancy, monitoring, and incident response. We live with risk and mitigate it.

2.2 Assembler vs. “C and C++” Compilers

Early in my career, some colleagues didn’t trust C/C++ compilers and stayed in Assembler for “control.” But higher-level languages scaled progress by enabling more people to build. We accepted that compilers might not produce the absolute “perfect” machine code, because the system-level outcome (speed of development, maintainability, security patches) was better.

With this in mind: Agentic AI is the next abstraction jump. Without it, we can’t keep up with the exploding volume of information and the coordination load. That doesn’t mean ignoring risk; it means engineering for it, communicating transparently, and keeping accountability with us rather than transferring it to the AI.

3. From “Deterministic” to “Trustworthy”

Given all this, some of us need a mindset shift: stop insisting on determinism when it doesn’t fit the problem. Instead, ask: “What would make this agent trustworthy enough for this use case?” This can be a longer journey for compliance teams, risk managers, and engineers.

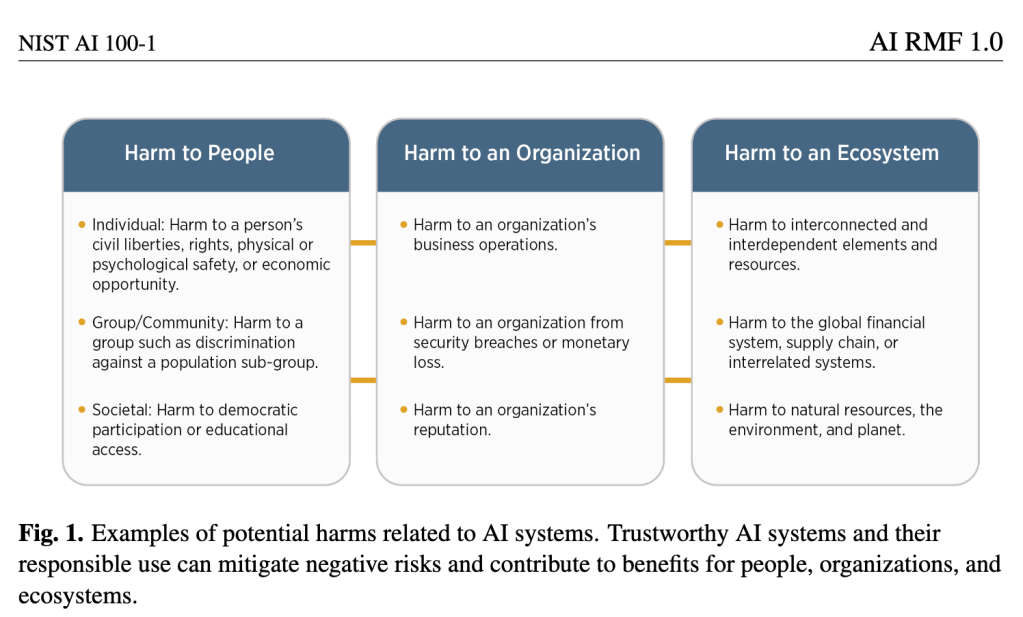

Some organizations already have a language for this. The NIST AI Risk Management Framework talks explicitly about building trustworthiness by understanding and managing risks across the AI lifecycle, not about promising perfection. NIST Publications

The image in this section is a screenshot from the NIST document, showing a short excerpt (Harm to People, Harm to an Organization, Harm to an Ecosystem).

And yes, “Agentic AI” is no longer just hype.

- Google describes agents that set goals, plan, and execute tasks with minimal human intervention. Google Cloud

- Microsoft calls agents a paradigm shift in how work gets done, and shows them coordinating multi-step tasks in the wild. Source

- IBM uses a similar framing with a strong enterprise angle: agents that pursue goals across the stack with observability, governance, and guardrails — reflected in watsonx Orchestrate and domain agents (e.g., in Maximo). IBM

4. What “Trustworthy Enough” Looks Like in Practice (What We Should Ask Teams)

When we review agentic ideas in teams or groups, we should not ask, “Is it 100% correct?” Instead, we should ask:

4.1 Scope & Impact

- What decisions will the agent make?

- What’s the blast radius if it’s wrong?

4.2 Controls & Guardrails

- Human-in-the-loop where it matters (approval, dual control)

- Clear tool permissions and data boundaries (least privilege)

- Observability: logging, tracing, artifacts for post-hoc analysis
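
To make these three controls a bit more tangible, here is a minimal Python sketch of how they could fit together. It is not taken from any specific agent framework: `ToolPolicy`, `GuardedToolbox`, the tool names, and the refund limit of 100 are all hypothetical, and the `approve` callback is only a stand-in for a real human approval step.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolPolicy:
    """Hypothetical per-agent policy (least privilege)."""
    allowed: set[str]                                   # tools the agent may call at all
    needs_approval: set[str] = field(default_factory=set)  # tools that need a human "yes"


@dataclass
class GuardedToolbox:
    policy: ToolPolicy
    tools: dict[str, Callable[..., object]]
    approve: Callable[[str, dict], bool]                # human-in-the-loop hook (assumed)
    audit_log: list[dict] = field(default_factory=list)

    def call(self, name: str, **kwargs):
        # 1. Least privilege: anything outside the allowlist is refused.
        if name not in self.policy.allowed:
            self._record(name, kwargs, "denied")
            raise PermissionError(f"tool '{name}' is not permitted for this agent")
        # 2. Human-in-the-loop: high-impact tools need explicit approval.
        if name in self.policy.needs_approval and not self.approve(name, kwargs):
            self._record(name, kwargs, "rejected")
            raise PermissionError(f"tool '{name}' was rejected by the approver")
        result = self.tools[name](**kwargs)
        # 3. Observability: every attempt leaves a trace for post-hoc analysis.
        self._record(name, kwargs, "executed")
        return result

    def _record(self, name: str, kwargs: dict, outcome: str) -> None:
        self.audit_log.append({"tool": name, "args": kwargs, "outcome": outcome})


# Illustrative wiring with toy tools; a real approver would ask a person.
toolbox = GuardedToolbox(
    policy=ToolPolicy(
        allowed={"lookup_order", "issue_refund"},
        needs_approval={"issue_refund"},
    ),
    tools={
        "lookup_order": lambda order_id: {"order_id": order_id, "status": "shipped"},
        "issue_refund": lambda order_id, amount: f"refunded {amount} for {order_id}",
        "delete_customer": lambda customer_id: "deleted",
    },
    approve=lambda name, args: args.get("amount", 0) <= 100,
)
```

In this sketch, `toolbox.call("delete_customer", customer_id="C9")` raises a `PermissionError` even though the function exists, because the policy never granted it. Every attempt, allowed or not, ends up in `audit_log`.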

4.3 Evaluation

- Offline evals with representative data (not just happy paths)

- Online canarying and feature flags

- Drift monitoring and rollback plans
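
As a rough illustration of the first two points, the following Python sketch runs a tiny offline evaluation that reports pass rates per category (so edge cases cannot hide behind happy paths) and assigns users to a canary group in a stable way. Everything in it is an assumption for illustration: `EvalCase`, `run_offline_eval`, `in_canary`, the toy agent, the exact-match scoring, and the 90% release threshold.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class EvalCase:
    prompt: str
    expected: str
    tag: str  # e.g. "happy_path" or "edge_case"


def run_offline_eval(agent: Callable[[str], str],
                     cases: Iterable[EvalCase]) -> dict[str, float]:
    """Return the pass rate per tag, using simple exact-match scoring."""
    totals: dict[str, int] = {}
    passed: dict[str, int] = {}
    for case in cases:
        totals[case.tag] = totals.get(case.tag, 0) + 1
        if agent(case.prompt).strip().lower() == case.expected.strip().lower():
            passed[case.tag] = passed.get(case.tag, 0) + 1
    return {tag: passed.get(tag, 0) / count for tag, count in totals.items()}


def in_canary(user_id: str, percent: int) -> bool:
    """Stable canary assignment: the same user always lands in the same bucket."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100 < percent


def toy_agent(prompt: str) -> str:
    # Stand-in for a real agent call; answers are made up.
    answers = {
        "refund policy?": "30 days",
        "support hours?": "9-17",
        "refund after 31 days?": "30 days",  # deliberately wrong edge-case answer
    }
    return answers.get(prompt, "unknown")


CASES = [
    EvalCase("refund policy?", "30 days", "happy_path"),
    EvalCase("support hours?", "9-17", "happy_path"),
    EvalCase("refund after 31 days?", "not eligible", "edge_case"),
    EvalCase("", "unknown", "edge_case"),
]

rates = run_offline_eval(toy_agent, CASES)
# Hypothetical release gate: every category must reach 90%.
release_ok = all(rate >= 0.9 for rate in rates.values())
```

Here the toy agent passes every happy-path case but only half of the edge cases, so `release_ok` ends up `False`. That is the situation this kind of gate is meant to catch before any canary traffic is enabled.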

4.4 Documentation & Explainability

- Decision logs and rationales where feasible

- User-facing disclaimers and UX that set proper expectations
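
A decision log does not have to be complicated. As a small sketch, assuming one JSON line per decision is good enough for a first version, a record could look like this in Python. The field names, the `DecisionRecord` class, and the example values are hypothetical and not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical shape of one logged agent decision."""
    agent: str
    decision: str
    rationale: str        # short, human-readable reason where feasible
    inputs: dict          # what the agent saw (mind data boundaries here)
    model_version: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize one record as a single JSON line for append-only logs."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    agent="refund-agent",
    decision="escalate_to_human",
    rationale="Refund amount is above the auto-approval limit",
    inputs={"order_id": "A1", "amount": 500},
    model_version="example-model-v1",
    timestamp="2025-01-01T00:00:00+00:00",  # fixed here only to keep the example stable
)
line = to_log_line(record)
```

One line per decision keeps the log easy to append, search, and replay during an incident review.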

4.5 Operations

- Service Level Objectives (SLOs) for latency, cost, and accuracy bands

- Incident runbooks: “If X goes wrong, do Y within Z minutes”
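
The link between SLOs and runbooks can be sketched in a few lines of Python: each objective carries its own "do Y" action, and a check over the observed metrics returns the actions that are due. The metric names, limits, and runbook texts below are invented for illustration, and `Slo` and `check_slos` are hypothetical helpers, not part of any monitoring product.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Slo:
    metric: str
    limit: float
    higher_is_better: bool  # True for accuracy, False for latency and cost
    runbook: str            # the "do Y" part of "If X goes wrong, do Y"


# Hypothetical objectives; real limits depend on the use case.
SLOS = (
    Slo("p95_latency_s", 5.0, False, "Switch to the fallback model and page on-call"),
    Slo("cost_per_task_usd", 0.20, False, "Throttle agent traffic and review tool-call loops"),
    Slo("task_accuracy", 0.92, True, "Roll back to the previous agent version"),
)


def check_slos(observed: dict[str, float], slos: Sequence[Slo] = SLOS) -> list[str]:
    """Return one runbook action per breached (or unmeasured) objective."""
    actions = []
    for slo in slos:
        value = observed.get(slo.metric)
        if value is None:
            # Missing data is treated as a problem in its own right.
            actions.append(f"{slo.metric}: no data, investigate monitoring")
        elif (value < slo.limit) if slo.higher_is_better else (value > slo.limit):
            actions.append(f"{slo.metric}={value} breaches {slo.limit}: {slo.runbook}")
    return actions


actions = check_slos(
    {"p95_latency_s": 3.2, "cost_per_task_usd": 0.35, "task_accuracy": 0.90}
)
```

In this example, latency is within its limit, while cost and accuracy are not, so `actions` contains two entries. Treating missing metrics as an incident is a design choice in this sketch, so that a silent monitoring gap is not mistaken for a healthy system.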

If our answers are solid, we’re already engineering for trust — the same way aviation, payments, or cloud reliability are engineered.

This is the perspective I used in my personal posts about testing agents and running them locally with watsonx Orchestrate and watsonx.ai.

5. A Reality Check: The World Is Moving — Fast

With all this in mind, we have to accept that AI will never be fully correct, but it can be trustworthy. Without AI, there’s no realistic way to keep up with the sheer volume of business information and the growing complexity of regulations in today’s fast-changing environment.

That’s exactly why we should double down on the basics before scaling:

- Data quality & provenance over model hype

- Guardrails & governance baked into design (not added later)

- Continuous evaluation (offline + online) with drift checks

- Clear ownership and incident playbooks for when things go wrong

Progress won’t come from pretending AI is deterministic. It will come from engineering for trust while the tools keep rapidly evolving.

6. My Personal Takeaway

We won’t reach a world where an agent is always right. But we can build systems that are trustworthy enough to drive real value — because we combine capable models with good engineering and clear accountability.

That’s risk-taking with eyes open.

And it’s how we’ve always moved to the next abstraction level in computing — from Assembler to compilers, from bare metal to cloud, and now from chat to agents.

If you’re asking yourself these questions, you’re not alone. I am, too. And I’ll keep sharing what I learn: successes, failures, and all.

Thanks for reading — I’d love to hear your perspective.

7. Further Reading (context I found helpful)

- My post: The Rise of Agentic AI and Managing Expectations — why expectations management matters when moving from chat to agents.

- Podcast (German): KI-Agenten für Unternehmen, Teil 1–3 with Max Jesch — differences between private and enterprise contexts, rollout challenges, and outlook. assono.de

- What is Agentic AI? Definitions and differentiators from Google and perspective pieces from Microsoft. Google Cloud

- Risk & Trust: NIST AI Risk Management Framework overview and the full AI RMF 1.0 PDF. NIST

8. References (short quotes)

- “Understanding and managing the risks of AI systems will help to enhance trustworthiness, and in turn, cultivate public trust.” — NIST AI RMF 1.0. NIST Publications

- “Agentic AI can set goals, plan, and execute tasks with minimal human intervention.” — Google Cloud. Google Cloud

- “AI agents are going to be a paradigm shift in how work gets done.” — Microsoft. Source

- “Build no-code or pro-code agents that tap into your business tools and data.” — IBM watsonx Orchestrate. IBM

- “AI agents for greater automation … Maximo Assistant will orchestrate multiple agents and workflows to take appropriate action.” — IBM Research (Maximo). IBM Research

- “KI-Agenten für Unternehmen … einführen, Herausforderungen und Ausblick.” — Podcast series with Max Jesch (IBM). assono.de

I hope this was useful to you. Let’s see what’s next!

Greetings,

Thomas

#ai, #agents, #agenticai, #personalpointofview, #myperspective